Every new transport airplane built today relies heavily on computerized systems. Automation has been the “name of the game” for several decades now, with each new generation adding more of it. Pilots are now accustomed to operating aircraft that contain a great deal of automation. This has led to well-known phrases such as “the children of the magenta line,” referring to pilots who are focused on just following the automation, and more technical terms such as “automation dependency” and similar concepts. We all know what these terms mean, and we see the behavior all the time, but is it really what we think it is? Is the problem really a lack of basic stick and rudder skills, or is something else afoot? Certainly, nobody would argue that stick and rudder skills do not become weaker as automation is used, but is that really the problem that leads “automation dependent” pilots to loss of control events?

The first aspect that I will discuss is what I see really occurring. I do not see pilots who are unable to fly the airplane. What I do see is pilots who keep messing with the automation in an attempt to “fix it” so it does what they (think they) want. In that process they get distracted, lose focus and end up in unexpected scenarios. The key here is strict discipline. The pilot-flying needs to focus on flying the airplane. The pilot-monitoring needs to ensure the pilot-flying is doing what they are supposed to do. My suggestion would be to teach both pilots to focus only on the aircraft path during any dynamic situation. Any change to configuration, any turn, initial climb or descent, altitude capture, etc., should involve both pilots focusing on the flight instruments. Further, if something is not going as expected, immediately degrade the automation to a point where both pilots know with certainty what it will be doing next. That may involve turning off all the automation.

That brings us to the second issue, which is not a new one but was really highlighted by the Boeing 737 MAX accidents. Pilots need to have a fundamental idea of what computers are really doing, and what they are not doing. We tend to think of the computers that are integrated into our aircraft as just another hardware component: either it does the job, or it has failed. Computers are different, but nobody is talking about it.

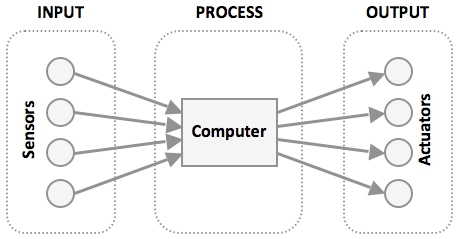

It might appear to be helpful to anthropomorphize the computer slightly to get a better sense of the situation, but that quickly becomes convoluted. Computers are quite simple “creatures” though. They are hooked up to sensors to “sense” the factors that the people who designed them deemed important. If the designer was a bit short of imagination, then the computer might be missing vital information in some scenario that was beyond what the designer imagined. Assuming that the data is all being collected as designed, that data goes into the computer, which uses a “process model” to decide what actions to take. The programmer has attached the output of the computer to various things that the computer is, literally, controlling. Unlike a living organism, a computer is totally unable to deviate from its programming. It cannot come up with a new or novel solution; it simply follows its instructions. “I know xyz, and based on the values of xyz I perform abc,” and that is all. There is no nuance here.

So what happens if the data coming in is flawed? What does the computer do? It does exactly what it was programmed to do, no more, no less. If the designer anticipated the data problem, the computer should do something rational; for instance, it might stop all further actions and alert the pilot that it cannot do its job. However, if the designer did not anticipate it, then there is no way for the pilot, in real time, to be certain of what it might do. Sure, any person with knowledge of software could look at the code and the data and tell you what it might do in that scenario, but that is of little help when a new scenario is discovered mid-flight.
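To make the difference between an anticipated and an unanticipated data fault concrete, here is a minimal sketch in Python. It is purely illustrative and is not taken from any real aircraft system: the function names, the thresholds, the two-sensor comparison and the alert text are all assumptions chosen for the example. The first controller trusts whatever angle of attack value it is given; the second is one way a designer who anticipated bad data might have it stop acting and tell the pilot.

```python
from dataclasses import dataclass
from typing import Optional

# All values below are hypothetical, chosen only for illustration.
AOA_LIMIT_DEG = 14.0      # above this, the function commands nose-down trim
MAX_DISAGREE_DEG = 5.0    # largest sensor split the designer will tolerate


@dataclass
class Command:
    trim_units: float       # negative means nose-down trim
    alert: Optional[str]    # message to the crew, if any


def naive_controller(aoa_deg: float) -> Command:
    """Designer did not anticipate bad data: the value is simply trusted."""
    if aoa_deg > AOA_LIMIT_DEG:
        return Command(trim_units=-1.0, alert=None)
    return Command(trim_units=0.0, alert=None)


def defensive_controller(aoa_left_deg: float, aoa_right_deg: float) -> Command:
    """Designer anticipated a sensor fault: on disagreement, stop and alert."""
    if abs(aoa_left_deg - aoa_right_deg) > MAX_DISAGREE_DEG:
        return Command(trim_units=0.0, alert="AOA DISAGREE: function inhibited")
    average = (aoa_left_deg + aoa_right_deg) / 2.0
    return naive_controller(average)


# One sensor has failed and reads 40 degrees; the other reads a normal 3.
print(naive_controller(40.0))            # acts on the bad value, says nothing
print(defensive_controller(40.0, 3.0))   # does nothing, and says why
```

Neither function is “smarter” than the other. Each does exactly what it was written to do; the only difference is whether the person who wrote it imagined the failure.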

Now recall the data inputs on an aircraft system, say a flight control computer. It needs airspeed, angle of attack, flight control positions and flap positions, and it might need CG, g-loading (Nz), Mach number and more. It takes that information in and then, based on what commands are given to it by the pilot, runs it through a process model and sends the result out to the control surfaces it has control over. These can include elevators, ailerons, spoilers, rudders, flaps, slats, trim, etc. The output is dependent on the input coupled with the programming, which becomes the process model. OK, hold that thought for a moment.

What is your procedure if something happens on takeoff that is not in the books? No QRH, or at least, no immediate action items. Let’s say a sensor failure: a loss of angle of attack data, a loss of the g-loading signal or even the inability of the computer to read a flight control position. You have some sort of fault indication (maybe) right after V1. What would you do? Most procedures have you do something like the following: continue the takeoff, positive rate, gear up, get to a safe altitude, clean it up, then troubleshoot (maybe), or perhaps just continue to the destination.

All nice, except for one little problem. That process model is flawed now due to bad data. The computer is unable to “know” the correct actions. As soon as you change any aspect of the situation, the result might be unexpected, because the computer mixes the new information about what you have changed with the bad data, and all of that goes into the software for a new “decision” about what output to perform. This is, in a nutshell, what occurred in the MAX accidents. Pilots retracted the flaps and BANG, MCAS was activated. A change coupled with a bad input in a scenario not anticipated in the design.
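The way a configuration change can “wake up” a bad input can be sketched in a few lines of Python. To be clear, this is not MCAS logic or anything close to it; the `Inputs` fields, the `process_model` function and the threshold are hypothetical, and the only thing borrowed from the accidents is the general pattern of a function that stays quiet while the flaps are extended.

```python
from dataclasses import dataclass

HIGH_AOA_DEG = 14.0  # hypothetical activation threshold


@dataclass(frozen=True)
class Inputs:
    aoa_deg: float          # angle of attack as reported by the sensor
    flaps_extended: bool    # configuration selected by the pilot


def process_model(inputs: Inputs) -> float:
    """Return a hypothetical nose-down trim command (0.0 means no action)."""
    if inputs.flaps_extended:
        # In this sketch the function is inhibited with flaps out,
        # so a bad AoA value has no visible effect yet.
        return 0.0
    if inputs.aoa_deg > HIGH_AOA_DEG:
        return -1.0
    return 0.0


faulty_aoa = 40.0  # the sensor failed at liftoff, before any change was made

before = process_model(Inputs(faulty_aoa, flaps_extended=True))
after = process_model(Inputs(faulty_aoa, flaps_extended=False))
print(before, after)  # 0.0 -1.0
```

The bad data was there the whole time. Nothing happened until the pilot changed one input that, from the cockpit, appeared to have nothing to do with the fault.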

MCAS is not the only “gremlin” out there like this. There are others lurking. For example, something as seemingly innocuous as the pilot giving the computer commands in a way that was not anticipated by the designer can yield some unexpected outcomes. So what can a pilot do? One thought is to consider the way computers work. If the airplane is flying OK, maybe it is worth considering not changing anything. No change to the configuration, no change to anything that is within the pilot’s control. Just keep it flying, and when any changes are made, be prepared for an unexpected outcome.

In a legacy airplane this was no problem. Changing the flaps or the landing gear would be unlikely to entirely change the way the airplane handled. Changing the flaps would not suddenly trigger secondary systems in unexpected ways. That is no longer true. There is simply no way to train pilots to understand all the possible ways that every system might react to every circumstance. Arguably even the designers might not have thought about all of them. So I would argue that our procedures are not keeping up with the changes to our aircraft architecture. Until they get caught up, pilots need to have a much better understanding of how computers work and how they interact with the world around them.