For developers and engineers alike, WordPress is an excellent solution for building a versatile website that end-users can easily operate, maintain, and manage.

At Hostinger, we also build our pages and manage content using WordPress. However, our big concern was: is WordPress reliable enough to handle millions of visitors?

Turns out, with the right scaling strategy, we can use WordPress as a basis for a resilient, agile, and highly available website, even during a traffic spike. Read on to learn how we do it.

The main blueprint

Our scaling strategy mainly centers around two technologies that are widely used in cloud infrastructure – Docker and Kubernetes.

Docker packages WordPress into containers. Meanwhile, we use Kubernetes to ‘orchestrate’ these containers, automatically managing their load and lifecycles.

Let’s explore each of these technologies and their benefits for improving the scalability of our WordPress instance.

Containerization with Docker

Docker packages your WordPress site into isolated environments called containers, each running independently from the others.

There are two ways to create a WordPress container: writing a Dockerfile manually or downloading the official WordPress Docker image.

Using the official WordPress Docker image streamlines deployment since you only need to run it as a container. If you use a Dockerfile, the basic content might look like this:

    # Start with the official WordPress Docker image
    FROM wordpress:6.6.1-apache

    # To use PHP-FPM instead, swap in:
    # FROM wordpress:php8.1-fpm

    # Update the package index, then install packages we might need
    RUN apt-get update && \
        apt-get install -y wget

    # Replace php.ini
    COPY php.ini /usr/local/etc/php/

Here’s the role Docker containerization plays in our WordPress scaling strategy:

Portability

You can deploy containers on multiple platforms easily, including the development, staging, and production environments.

Since each container ships WordPress with the same packages, add-ons, and configurations, we can maintain consistent performance and compatibility when redeploying it on another machine.

Customization

You can modify the Dockerfile to add custom themes, plugins, and packages tailored to your website needs. Then, you can install them automatically as you build the container.

Without Docker, you must set up these themes, plugins, and packages individually after deploying a new WordPress instance. This takes a long time and is more prone to human error.

Configuration

Use the WORDPRESS_* environment variables to simplify configuration without directly modifying wp-config.php. This enables a consistent configuration across containers and makes customization safer since you don’t hard-code settings into the PHP file.
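As a rough sketch, a Docker Compose file could set these variables as shown below. The variable names come from the official WordPress image, while the host name, database name, and table prefix are placeholder values chosen only for illustration.

```yaml
# Minimal sketch: configuring the official image through WORDPRESS_* variables.
# All values below are illustrative placeholders, not our production settings.
services:
  wordpress:
    image: wordpress:6.6.1-apache
    ports:
      - "8080:80"
    environment:
      WORDPRESS_DB_HOST: db.example.internal:3306  # hypothetical database host
      WORDPRESS_DB_NAME: wordpress                 # hypothetical database name
      WORDPRESS_TABLE_PREFIX: wp_
```

Because the settings live outside the image, the same image can run unchanged in development, staging, and production with different values.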

Reliability

Docker lets you create multiple identical instances of your WordPress website, which is the core of our horizontal scaling strategy.

Having multiple WordPress containers improves reliability since another instance can take over when one crashes. A setup with several containers also lets you distribute traffic more evenly through load balancing.

Deployment with Kubernetes

The key to making your WordPress instance highly scalable and resilient is utilizing Kubernetes’ container orchestration capabilities.

In Kubernetes, you deploy WordPress containers in pods – the smallest deployable units that can easily be scaled up and down to meet changing user demands.

We use pods to deploy and manage multiple identical WordPress instances with the same core files, configuration, and extensions. As explained before, this will be the basis of our horizontal scaling.
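To make this concrete, here is a minimal sketch of a Deployment that runs several identical WordPress pods. The replica count, labels, and resource requests are assumptions picked for illustration rather than our actual values.

```yaml
# Minimal sketch: three identical WordPress pods managed by one Deployment.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
spec:
  replicas: 3                # illustrative starting point
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
        - name: wordpress
          image: wordpress:6.6.1-apache
          ports:
            - containerPort: 80
          resources:
            requests:        # assumed values; needed for utilization-based autoscaling
              cpu: 250m
              memory: 256Mi
```

Every pod is created from the same template, so scaling is a matter of changing the replica count.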

Our Kubernetes setup consists of the following:

Horizontal Pod Autoscaler (HPA)

We implement HPA to dynamically scale the pods according to traffic. When many users access our website, HPA adds pods to spread the load, and it removes idle ones once traffic drops.

In a traditional WordPress setup, a single instance handles all the traffic. Even with a larger resource pool, this is less reliable since that one instance becomes a bottleneck and a single point of failure.

HPA works by tracking specific metrics on your server, which can be:

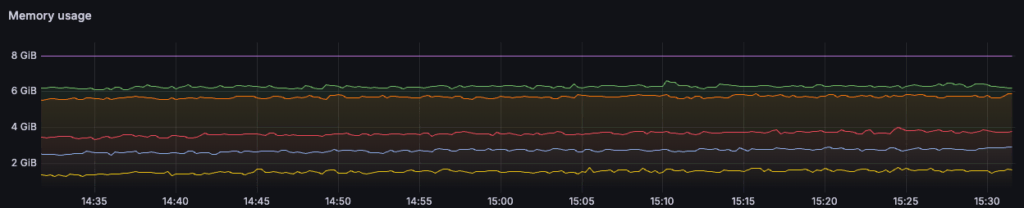

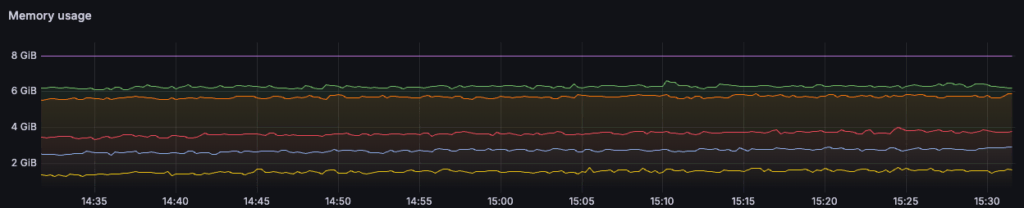

- Built-in metrics – resource utilization of the pod, such as CPU usage or memory consumption. You set a target value, and HPA scales your application to keep the average close to it. For example, with a target of 80% CPU utilization, HPA starts new pods when average usage rises above 80% and shuts pods down when usage falls well below it.

- Custom metrics – HPA also supports custom metrics like Apache server data (for example, the number of active connections, request rate, or response times) or PHP-FPM data as a trigger for scaling. To integrate custom metrics into Kubernetes, you need a custom metrics adapter such as Prometheus Adapter; the Kubernetes Metrics Server only supplies the built-in CPU and memory metrics.

For more specific tracking and scaling rules, you can also combine multiple metrics. HPA then calculates the number of pods each metric calls for and uses the highest one.
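The rules described above could be written as an HPA manifest along these lines. This is a sketch under assumptions: the replica limits and targets are illustrative, and apache_busy_workers is a hypothetical metric name that would only exist if a custom metrics adapter exposes it.

```yaml
# Minimal sketch: scale the wordpress Deployment on CPU plus one custom metric.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: wordpress
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: wordpress
  minReplicas: 2             # illustrative limits
  maxReplicas: 10
  metrics:
    - type: Resource         # built-in metric
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80
    - type: Pods             # custom metric, requires a metrics adapter
      pods:
        metric:
          name: apache_busy_workers   # hypothetical metric name
        target:
          type: AverageValue
          averageValue: "20"
```

Utilization targets are measured against each container's CPU request, so the pods need resource requests set for the first rule to work.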

HPA itself is reactive: it scales in response to current metric values. Still, watching how it scales over time reveals regular traffic patterns, and this insight lets us scale the WordPress instance ahead of time, for example by raising the minimum number of pods before an expected load surge.

Kubernetes Ingress

Ingress is a resource that manages external access to the services in your Kubernetes cluster. It has several functions that are helpful for scaling WordPress:

- Load balancing – without it, all user requests land on a single back-end endpoint, which becomes unreliable during traffic surges. Ingress can act as a load balancer that routes requests to the appropriate service, spreading them more evenly across pods.

- SSL/TLS termination – Kubernetes ingress controllers can take care of the SSL/TLS encryption and decryption, which can be resource-intensive. This offloads the workload from the main WordPress application service so it can focus on the operational logic.
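Both functions can be seen in a minimal Ingress sketch like the one below. It assumes an NGINX ingress controller, a Service named wordpress on port 80, and a TLS secret named wordpress-tls; the host name is a placeholder.

```yaml
# Minimal sketch: route HTTPS traffic to the WordPress service and terminate TLS at the ingress.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: wordpress
spec:
  ingressClassName: nginx          # assumes an NGINX ingress controller is installed
  tls:
    - hosts:
        - www.example.com          # placeholder host
      secretName: wordpress-tls    # hypothetical secret holding the certificate and key
  rules:
    - host: www.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: wordpress    # assumed Service in front of the pods
                port:
                  number: 80
```

With this in place, the WordPress pods only receive plain HTTP from inside the cluster.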

Environment variables and secrets

Keeping sensitive information like access credentials in environment variables is a security best practice. Since you don’t hard-code such information directly into your WordPress files, it is less likely to get leaked.

In Kubernetes, you can also store this data in a small object called a secret, which you can then expose to the pod as environment variables or mount as a volume. The YAML configuration might look as follows:

    spec:
      containers:
        - name: wordpress          # container name assumed for this fragment
          env:
            - name: WORDPRESS_DB_USER
              valueFrom:
                secretKeyRef:
                  name: db-user
                  key: db-username

Storing data in secrets is more secure than writing values directly into the pod specification, since a secret exists independently of the pods that use it. This means secrets and their data are less likely to be exposed when managing pods.

Data in a secret is base64-encoded, which is an encoding rather than encryption, but it can also be encrypted at rest. You can make secrets immutable as well to prevent accidental or unauthorized modifications that can cause incidents.
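For reference, the secret behind the db-user and db-username names used above might be defined roughly like this. The user name wp_app is a made-up value for illustration.

```yaml
# Minimal sketch: an immutable secret holding the database user name.
apiVersion: v1
kind: Secret
metadata:
  name: db-user
type: Opaque
immutable: true          # the data can no longer be changed after creation
stringData:
  db-username: wp_app    # hypothetical value; Kubernetes stores it base64-encoded
```

Since an immutable secret cannot be edited, rotating the value means creating a new secret and pointing the pods at it.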

Challenges and solutions

While Docker and Kubernetes are excellent solutions for scaling our WordPress deployment, we encountered several challenges when using these technologies. Here are some of the difficulties and how we managed to navigate through them:

Using Apache metrics with HPA

Kubernetes’ HPA works well when using built-in metrics like CPU or RAM usage. However, we found out that integrating a more specific external parameter (like the number of busy Apache workers or detailed PHP-FPM metrics) is quite challenging.

Using specific metrics to trigger the scaling requires setting up a custom exporter and exposing the data for Kubernetes to read.

A simpler solution is to use a third-party package that already ships with the tooling for exporting the metrics. One example is the Bitnami Helm chart, which can enable Apache metric tracking for HPA out of the box.

Maintaining consistency between pods

When deploying WordPress on Kubernetes pods, you must ensure that each instance is identical. Each pod must have the same core files, plugins, media files, and other data.

In production, maintaining consistency across pods can be tedious. Plugins, media files, and other data change while the site is running, so you can’t rely on the Docker image alone as you would at deployment time.

To make things simpler, our solution was to set up Network File System (NFS) storage that each pod can read and write. This storage houses data that will update over time, like /var/www/html/wp-content/plugins.

For the NFS storage, you should use a cloud solution that can be bound to multiple pods, like AWS EFS or GCP Filestore.

To connect pods to your NFS storage, create a PersistentVolumeClaim that requests the storage. Then, set the ReadWriteMany (RWX) access mode so that multiple pods can mount and write to the volume at the same time.

Your pods’ PersistentVolumeClaim and Deployment configuration might look as follows:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: plugins
    spec:
      accessModes:
        - ReadWriteMany
      storageClassName: nfs-client
      resources:
        requests:
          storage: 20G
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: wordpress
    spec:
      ...
              # inside the container definition
              volumeMounts:
                - name: wordpress-plugins
                  mountPath: /var/www/html/wp-content/plugins
          # at the pod spec level, alongside containers
          volumes:
            - name: wordpress-plugins
              persistentVolumeClaim:
                claimName: plugins

Using a reliable database

Along with the WordPress instance, optimizing your database is important to prevent bottlenecks and data loss. The database must also be able to maintain its performance and uptime during high-traffic times.

We can achieve this using a robust database setup (preferably managed) with reliable safety features. For example, it should have automatic failover and built-in replication.

This configuration ensures data integrity and service availability even during failures or heavy loads.
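One possible way to point every pod at a managed database is an ExternalName Service, sketched below. The Service name and the database host name are placeholders we made up; your provider's endpoint will differ.

```yaml
# Minimal sketch: give a managed database a stable in-cluster name.
apiVersion: v1
kind: Service
metadata:
  name: wordpress-db                   # hypothetical in-cluster name
spec:
  type: ExternalName
  externalName: mydb.example.com       # placeholder for the managed database endpoint
```

The pods can then set WORDPRESS_DB_HOST to wordpress-db, and a change of database endpoint only requires updating this one object.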

Key takeaway

As our WordPress deployment grows, our main concern is how to scale it to handle millions of visitors. We found out that by using Docker and Kubernetes, we can create a scalable website that is agile and reliable, even under high load.

The main idea is to deploy multiple WordPress containers as pods, which we scale dynamically using Kubernetes’ HPA. This allows our setup to automatically distribute load evenly across pods to optimize resource usage.

Like us, you can optimize your own WordPress deployment even further by leveraging Kubernetes’ built-in features like ingress and custom HPA metrics. Paired with a robust cloud infrastructure for the database and dynamic data storage, you can set up a powerhouse to unleash WordPress’s full potential.