A first-of-its-kind in-depth GenAI benchmarking study out today (27 February) has measured the performance of four legal AI tools compared with a human lawyer control group, with Harvey receiving the highest overall score, followed by Thomson Reuters CoCounsel. The other two evaluated tools were Vincent AI from vLex and Oliver from Vecflow. Lexis+AI from LexisNexis withdrew from the report, in a step that will, rightly or wrongly, be interpreted as a fairly damning indictment of its results.

The report was conducted by Vals AI, a company that independently evaluates and benchmarks the performance of large language models on industry-specific tasks. Its aim is to assess their accuracy and efficacy in real-world scenarios and to highlight the strengths and weaknesses of different LLMs. Vals AI was supported by Legaltech Hub and by alternative legal services company Cognia, which assisted with the human review portion of the benchmarking exercise.

Underpinning the study was the principle that vendors had to agree voluntarily to take part and provide technical support to ensure that Vals AI was using their products correctly. Vendors were given the option to opt out once they had seen the results, on the understanding that any withdrawal would be made public.

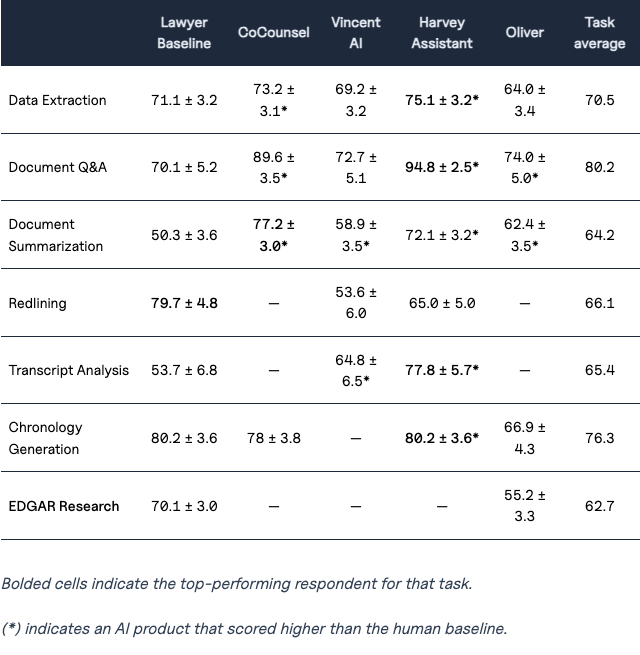

AI tools and the human control group (the “lawyer baseline”) were set seven tasks: data extraction; document Q&A; document summarisation; redlining; transcript analysis; chronology generation; and EDGAR research.

Harvey opted into every task except EDGAR research and received the highest score of any participating AI tool on five of those six tasks. The sixth, document summarisation, went to CoCounsel.

CoCounsel was submitted for data extraction, document Q&A, document summarisation and chronology generation. It received high scores on all four tasks, particularly document Q&A, where it scored 89.6%, the third-highest score of any participant in the study.

The AI tools collectively surpassed the lawyer baseline on tasks related to document analysis, information retrieval, and data extraction.

This marks the first time that multiple law firms and vendors have come together to objectively assess legal AI platform performance on real-world examples of legal tasks. It follows a study by Stanford University last year which suggested that legal LLMs hallucinate more than 17% of the time.

Vals had already begun its work when the Stanford report came out. Speaking to Legal IT Insider, Rayan Krishnan, a software engineer from the Stanford Artificial Intelligence Laboratory who co-founded Vals AI in August 2023, said: “It left a lot of law firms very confused as to how to use the technology and was limiting adoption, so this motivated us to double down on our effort and produce an independent benchmark of the tools and overcome some of the core problems we saw with that Stanford study.”

Whereas the Stanford tests were regarded as contrived and designed to trick the AI into failure, Krishnan told us: “We decided to start with the law firms and in particular we worked with 10 of the top US and UK law firms to collect questions from them on how they actually want to use the products, and that defines the use cases and the entire data set that we use for testing.”

Stanford didn’t obtain consent and tested the products without an official licence to do so, whereas Krishnan says it was important to get permission and full product access. It was also important to have a human baseline against which to compare accuracy. Krishnan says this will also help the sector understand how lawyers may wish to work differently as a consequence of these tools, and what opportunities there will be to delegate key parts of legal services.

While the tools performed well on document analysis, information retrieval, and data extraction, they found chronology generation, redlining, and transcript analysis more challenging. Only Oliver from Vecflow produced a score for EDGAR research.

There are a few other interesting things to note. One is that we at Legal IT Insider conducted our own analysis of how public tools such as ChatGPT fare when asked the legal questions that tripped up AI tools in the Stanford test, and ChatGPT fared well. ChatGPT’s enterprise team wasn’t interested in taking part in this study, but it may be for the next one.

The second is the impact this study will have in helping law firms move away from comparing AI tools with the ‘perfect lawyer’: in the human baseline, the lawyers’ scores were anything but perfect. The tools also completed the tasks much faster.

In terms of ‘what now’, Krishnan says: “I’m just fascinated because now there’s a line in the sand of roughly the things which you probably shouldn’t be doing as a human. You wouldn’t do a three-digit by three-digit multiplication by pen and paper; you’d use a calculator. And so similarly if you need a one-paragraph summary, you’d toss that into a generic AI tool. But I think there are some more sensitive things, especially on redlining, where I wouldn’t want my lawyer using a tool like that out of the box.”

The hope now is that more independent assessments will follow to help law firms that are inundated with new tools. “I think they will look to have a minimum baseline of having some checks done before they start a pilot,” said Krishnan. Vals worked in consultation with John Craske, chief innovation and knowledge officer at UK law firm CMS, who is leading a GenAI benchmarking initiative from LITIG, the UK Legal IT Innovators Group.

Law firms often want to be involved with early-stage concepts, and it would be unfortunate if this benchmark discouraged new competitors from entering the market. Even so, the importance of the study cannot be overstated as the industry works towards transparency and towards being able to meaningfully measure and compare the performance of GenAI tools on the market.

LexisNexis told us in a statement: “The timing didn’t work for LexisNexis to participate in the Vals AI legal report. We deployed a significant product upgrade to Lexis+ AI, with our Protégé personalized AI assistant, which rendered the benchmarking analysis out-of-date and inaccurate.”

To access the report, go to https://www.vals.ai/vlair