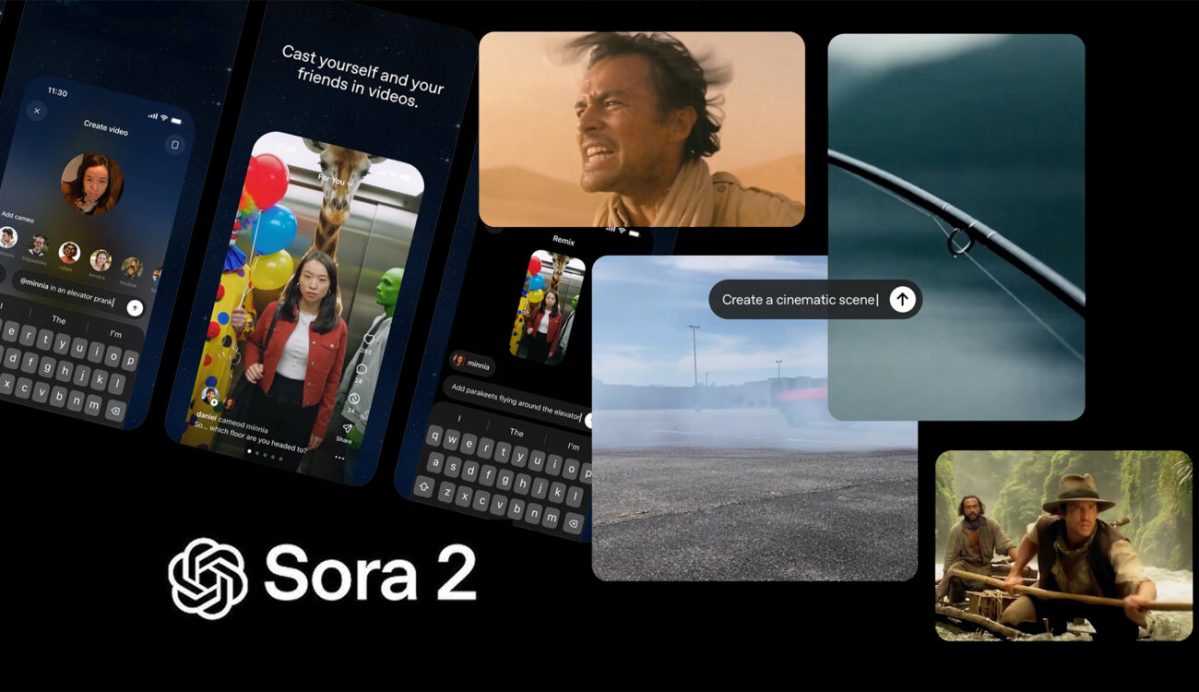

Something remarkable is happening online, and I don’t quite know how to explain it, but I’ll try. OpenAI has released Sora 2, the latest version of its video-generating tool, and it has landed with a bang. Before launch, Sam Altman made headlines by suggesting that copyright holders would need to opt out of having their works used for outputs, a statement that understandably set off alarm bells in some circles. I’ll admit that at first I assumed he had been misquoted. But then Sora 2 dropped, and it turned out his comment was actually an understatement. The tool arrived with practically no copyright filters whatsoever, and over the past couple of days we’ve seen copyrighted material used in ways that are, to put it mildly, eye-opening. Nazi SpongeBob; Rick and Morty selling crypto; shoplifting Pikachu; Wednesday Addams having dinner with Peter Griffin. The internet, of course, is doing what the internet does: testing the limits.

I have to admit that I did not see this coming. After the Anthropic settlement, my assumption was that AI developers would try to settle most of the remaining cases while also pushing for copyright reform, and that copyright holders would try to obtain licensing deals wherever possible. But Sora 2 is being pushed in a way that seems to assume copyright ceased to exist overnight. How can OpenAI justify doing this? What is the plan?

I can hear hundreds of lawsuits being furiously drafted as we speak. I can't believe that Hollywood will let this one slide without a fight. Many of the videos I have seen could easily be classified as copyright infringement, so what's the strategy to escape liability? Has Sam Altman lost his mind? I don't think so. Here are my theories about what is going on.

1. Fair use and safe harbours

The most parsimonious explanation is that OpenAI are betting on fair use. The quality of some outputs suggests the model has been trained on vast amounts of video content from copyright holders worldwide, which places much of the infringement risk squarely in the input (training) phase. OpenAI's lawyers may believe that this training qualifies as fair use, but given the size of the companies whose works are involved, that seems like a very risky bet. A single strategically placed lawsuit could result in damages large enough to threaten the company's existence.

Another possible legal explanation is that OpenAI are assuming potentially infringing outputs are covered by the DMCA’s safe harbour provisions. In short, these protect online service providers from liability for copyright infringement by their users, provided certain conditions are met. Intermediary platforms like YouTube or social media networks are not automatically liable if someone uploads infringing material, so long as the platform lacks actual knowledge, removes content expeditiously upon notice, and has clear policies for dealing with repeat infringers. This could explain Altman’s comments about opt-outs.

But again, this strategy rests on legal theories that have never been tested in this context. Risky.

2. Tacit or explicit agreement from rightsholders

Another simple explanation is that agreements are already in place, allowing for a limited period of hype, much like what happened during the Ghiblicalypse. For a couple of weeks, the internet was Ghiblified, boosting Studio Ghibli's profile before the trend faded. It's possible that studios are banking on a similar short-term boost. Eventually, the internet will grow tired of the novelty, and Sora videos of copyrighted characters will become as lame as a Ghiblified image is today.

People who think that video generation will not be adopted by Hollywood and other creative industries are hopelessly deluded. Corporations thrive on cost-cutting, and these tools will eventually be used precisely for that. I would be surprised if negotiations to enable access to these tools were not already taking place. The short-term hype may be worth it in exchange for access to future technologies.

However, I really don't think this is likely to hold in every case. All it takes is for one corporation to decide not to play ball, and the entire edifice could collapse under crippling copyright litigation.

3. Relying on politics

As I discussed earlier, the tech industry has openly embraced the Trump administration, both through donations and by repeatedly appearing to bend the knee and pledge fealty to the MAGA regime. One result of this strategy may be that AI developers are getting some form of assurance that they will not be affected by copyright lawsuits. Trump hates the Hollywood "elites", so this could result in policies that favour the tech industry and punish what the administration sees as the out-of-touch woke glitterati.

I think that some form of regulation or legislation making AI training immune from liability is still on the cards. My guess would be something akin to the aforementioned DMCA safe harbours.

However, this strategy is also risky if true. Trump is unreliable, fickle, and prone to favour whoever spoke to him last.

4. Settlements all the way down

While the Bartz v Anthropic agreement is now the largest copyright settlement in history, one could argue that it is peanuts compared to what AI developers would have to pay under licensing agreements or after adverse copyright decisions. If you are a class litigant against an AI company and you're offered a payout in the billions of dollars, you are likely to take the money rather than rely on a dubious legal outcome that may not go your way. So OpenAI may have looked at the settlements and concluded that settling is likely to be a manageable expense.

But this is another risky strategy: all it takes is for one litigant to refuse a payout, and you're in trouble.

5. AGI is coming

Finally, if you’re certain that Artificial General Intelligence is coming, then society will change in ways that we have not even begun to think about, and all existing legal frameworks may have to be changed. If OpenAI were to win the AGI race, then copyright would be nothing more than a footnote in the history books.

However, I don’t think that AGI is near.

6. ¯\\\_(ツ)\_/¯

We have no idea what is going on. Reality makes no sense. We’re living in a simulation. The aliens are coming. The world is about to end. AGI is already here.

Take your pick.

7. It’s all about the memes (edited)

We’re in an attention economy. This wins the attention wars. Copyright be damned.

Concluding

I have to admit that I'm not the biggest fan of video generation, and I'm particularly not a fan of using copyrighted characters in AI generation in general; I personally find it rather unimaginative. Presented with a technology that can generate anything you can think of, I find it disappointing that many people will choose to use it to reproduce existing IP. Why not try to come up with something new? But who am I to talk? Most of my image generation experiments are about llamas and cats, so I cannot accuse anyone of being unoriginal.

But video generation is proving to be very popular, and so is the use of existing characters, regardless of many people's objections. I am surprised that OpenAI have been so carefree about outputs featuring copyrighted characters, and I still refuse to believe that this hasn't been done with some sort of strategy in mind. I just don't know what it is; only time will tell. But one thing is clear: this could herald a lasting change in how we think about copyright.

In the meantime, here is a video of a llama and a cat having coffee in the Andes. Enjoy.