Today, we are going to decode a visual. It represents the principle of continuous integration at AT Internet:

The main principles of continuous integration

Continuous integration is a set of practices designed to deliver the software code produced by development teams effectively. Its objectives include:

- Limiting manual actions by automating builds, deliveries and other deployment operations. This limits the risk of human error and allows experts to focus on their product rather than continually investing time in repetitive operations that a machine can do for them.
- Making feedback rapidly available, giving good visibility of the potential impact of changes made to the existing code. Each member of the development teams can then calmly make changes to the existing code base and rely on the automation in place to tell them whether their code has been properly integrated into the product.

This concerns the continuous integration of new code, the continuous delivery of deliverables and the continuous deployment of products. But how do you link source code, deliverables and environments in the overall process? Here are some frequently asked questions:

- When I practice “promotion”, is it the promotion of my code? The promotion of deliverables? The promotion of the environment?
- What is the link between deployment in production and the master branch of the code repository?
- What automation can I implement to ensure consistency between the life of my code repository and that of my application, across different environments?

These questions may be answered differently depending on the technical or project context, the sector of activity or the maturity of the company's processes. In this article, I will therefore try to describe the various concepts and principles without depending on a particular tool. Even if some tools can make life easier, this is more a question of the software delivery cycle, regardless of the means chosen to implement it.

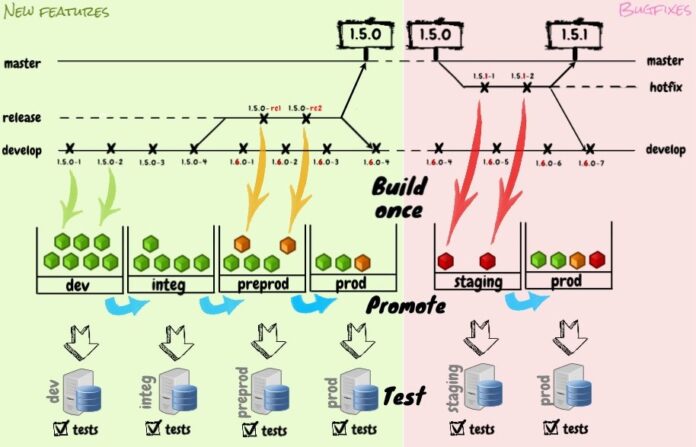

The “build once” concept

One of the key principles of continuous integration is “build once”: in other words, only build a new deliverable if the business code has been modified. This practice is intended to reduce the risk of building a different deliverable when deploying it in a new environment. Indeed, all too often we see deliverables being rebuilt at delivery time, on the assumption that the result will be identical.

But there are still risks (sometimes significant) during this new build:

- Machine problems (full disk, etc.)
- Network issues
- Problems with the build tool (in maintenance, unavailable)
- Embedding a different version of one of our product's dependencies
- Unintentionally relying on a different version of the code

We can therefore, without realizing it and despite taking precautions, obtain a product different from the one that successfully passed the various validation phases of our development cycle. All the benefits of the tests and verifications carried out are then lost.

The best way to avoid this risk is to build the deliverable only once and then use it as it is for every later deployment, through the validation phases and all the way to production. This is what is known as “build once”.
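One simple way to check that the deliverable being deployed really is the one that was built is to record a checksum at build time and compare it before each deployment. The sketch below is only an illustration under my own assumptions: the file name and the helper names `sha256_of` and `is_same_deliverable` are hypothetical and do not come from any particular tool.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_same_deliverable(path: Path, recorded_digest: str) -> bool:
    """True if the file is byte-for-byte the deliverable recorded at build time."""
    return sha256_of(path) == recorded_digest


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        artifact = Path(tmp) / "app-1.4.0.zip"  # hypothetical deliverable name
        artifact.write_bytes(b"binary produced by the single build")
        recorded = sha256_of(artifact)  # stored once, right after the build

        print(is_same_deliverable(artifact, recorded))  # True

        # Simulate a rebuild that silently produced different bytes.
        artifact.write_bytes(b"binary produced by a rebuild")
        print(is_same_deliverable(artifact, recorded))  # False
```

A check like this does not replace “build once”; it only makes an accidental rebuild or a corrupted copy visible before deployment.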

The promotion of deliverables

Now that we have our deliverable, we want to subject it to a number of tests in order to validate its release to production. We therefore need different “shelves” to store our deliverables, so that we can easily identify them according to their progress through the validation phases.

These “shelves” can take different forms depending on the technologies (deliverable managers, delivery folders, archiving of artefacts, storage of Docker images, etc.) and their number depends on the chosen delivery cycle. Some companies will need more validation and integration phases than others, for reasons of compliance with certain standards, complex integrations, etc.

At AT Internet, we have chosen to have 4 “shelves” to organize our deliverables (dev, integ, preprod, prod) and an additional one (staging) to allow the urgent delivery of bugfixes.

Moving a deliverable from one shelf to the next is conditional on the successful completion of various test and validation phases. If all the conditions are met, the deliverable can be moved to the next shelf: this is the promotion. Some will simply copy (rather than move); some tools directly expose methods for promoting the deliverables they host.

We then have an overview of the deliverables and their level of validation, simply by observing their place in the repository. The presence of a deliverable on one of the shelves also marks it as a candidate for a given environment. It is this strong link that sometimes leads to the term “environment promotion”.
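To make the idea concrete, here is a minimal sketch that models the shelves as plain folders and promotion as a copy to the next shelf. The shelf names come from the list above; everything else (the folder layout, the `promote` and `shelves_holding` helpers, the `validated` flag) is a hypothetical illustration, not the mechanism of any specific deliverable manager.

```python
import shutil
from pathlib import Path
from typing import List

# Shelf order from the article; staging is the separate bugfix path to prod.
NEXT_SHELF = {"dev": "integ", "integ": "preprod", "preprod": "prod", "staging": "prod"}
ALL_SHELVES = ["dev", "integ", "preprod", "staging", "prod"]


def promote(repository: Path, shelf: str, name: str, validated: bool) -> Path:
    """Copy deliverable `name` from `shelf` to the next shelf if validation passed."""
    if shelf not in NEXT_SHELF:
        raise ValueError(f"{shelf!r} has no next shelf")
    if not validated:
        raise RuntimeError(f"{name} failed validation on {shelf}; not promoted")
    source = repository / shelf / name
    target_dir = repository / NEXT_SHELF[shelf]
    target_dir.mkdir(parents=True, exist_ok=True)
    # The file is copied untouched: the deliverable is never rebuilt.
    return Path(shutil.copy2(source, target_dir / name))


def shelves_holding(repository: Path, name: str) -> List[str]:
    """List the shelves on which a deliverable is currently present."""
    return [s for s in ALL_SHELVES if (repository / s / name).exists()]
```

Calling `shelves_holding` on a deliverable gives the overview described above: its position in the repository tells us how far it has been validated.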

Internal environments

Once these deliverables have been obtained and stored somewhere, they must undergo the various testing and validation steps that decide whether or not we can deliver them to production. Each deliverable must therefore be deployed to the environment for which it is a candidate: the product is put into service on a given environment so that dynamic tests can be run. These are “black box” tests, independent of the code or technology used for the implementation.

Development: the first phase of deployment

Each deliverable is first deployed on the development environment and subjected to a first phase of testing. The first of these tests consists in validating the deployment mechanism itself, since this is the first time we have tried to deploy this deliverable anywhere. The successful completion of the deployment is a first step towards ensuring that we are able to deliver the product to production.

We then validate on this environment a number of properties of our system (functional or non-functional), as well as the validity of some of our deliverable's configurations.

If everything is OK, we can promote the deliverable and deploy it to the next environment for the integration phase.

Integration: validate all scenarios

In this phase, the system validated in isolation is brought into contact with the other systems of the software solution. It can then be used in the system integration testing phase, which runs business use cases that span several systems in order to validate that the various elements of the solution are correctly connected.

Each time validation on the development environment succeeds, this integration environment can be updated.

Pre-production: dress rehearsal

In pre-production, we carry out a final phase of tests before going into production, deploying only the features that we have decided to ship in the next release. This selection is not a mandatory step and is often tied to a marketing or commercial decision.

In this environment, the work is usually acceptance testing, which is not intended to detect bugs in the product. If bugs are found at this stage, it is often a sign of a lack of testing in one of the previous steps.

If delivery to production is not tied to customer communication or support constraints, it is entirely possible to have a single “pre-production” environment instead of separate integration and pre-production environments. We then accept the constraint (or advantage) that all validated code goes straight into production if it successfully passes the subsequent validation steps.

Staging

Staging makes it possible to validate a fix that must be delivered quickly to production. The current production code is deployed on this environment, with the fix as the only modification, in order to validate that the observed problem is actually corrected. We also check that this change introduces no side effects (regressions).

Production

Some tests of a different type can take place in production, often referred to as “Post-Deployment Tests”. These are more about validating aspects related to the environment, specific configurations, monitoring systems, or what I would call functional experiments (canary testing, A/B testing, feature flipping, etc.).

At this stage, the objective is not to detect bugs in one of the systems involved (even if this can happen!).

Delivery automation

This orchestration of the delivery of a software product is intended to be systematic and repetitive, so it is natural to think about automating all of it. Different tools are of course available to set up this orchestration (Jenkins, Travis CI, GitLab CI, etc.) but the principles are independent of the tool chosen. Beyond the essential steps of this automation (shown on the right of the image linked to the article), it seems important to me to highlight only a few details:

- Publication: this is the deposit of the deliverable on one of the “shelves” for its future deployment on one of the environments. The shelf chosen depends on the code branch considered. Here are the identified publication paths:
  - “develop” branch => candidate deliverables for the “development” environment
  - “release” branch => candidate deliverables for the pre-production environment
  - “hotfix” branch => candidate deliverables for the staging environment
  - Any other branch => no publication of the deliverable (it can still be retrieved if desired for testing)
- The promotion step can be used to automate various operations (some will prefer to keep control of these operations or integrate them into the next step, the deployment phase):
  - Integration > pre-production promotion:
    - merge the code from “develop” to “release”
    - increment the minor version and reset the patch version to ‘0’ on ‘develop’
  - Pre-production > production promotion:
    - merge the “release” code to “master”
    - apply a tag on ‘master’
  - Staging > production promotion:
    - merge the code from “hotfix” to “master”
    - apply a tag on ‘master’
- At AT Internet, we use Jenkins and one question often comes up in the teams: should we orchestrate all this into one big job or into several separate jobs? The answer is somewhere in between and depends on your progress in implementing the different steps of continuous integration. However, some principles are important to consider:
  - The deployment of an existing deliverable must be possible at any time, regardless of the stages of construction of that deliverable.
  - The launch of certain tests on a given environment must be possible at any time, without depending on the stages of construction and promotion of deliverables.
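The publication paths and the version bump described above are simple enough to sketch in a few lines, whatever orchestrator ends up calling them. The following is only an illustration: the function names are hypothetical, the shelf names reuse those of our repository, and accepting prefixed branch names such as `release/1.4` is my own assumption rather than something the process requires.

```python
from typing import Optional


def shelf_for_branch(branch: str) -> Optional[str]:
    """Return the shelf a build of `branch` is published to, or None if unpublished."""
    if branch == "develop":
        return "dev"
    # Assumption: release and hotfix branches may carry a suffix, e.g. "release/1.4".
    if branch == "release" or branch.startswith("release/"):
        return "preprod"
    if branch == "hotfix" or branch.startswith("hotfix/"):
        return "staging"
    return None  # any other branch: the deliverable is not published


def next_develop_version(version: str) -> str:
    """Increment the minor version and reset the patch to 0 (MAJOR.MINOR.PATCH)."""
    major, minor, _patch = (int(part) for part in version.split("."))
    return f"{major}.{minor + 1}.0"


if __name__ == "__main__":
    print(shelf_for_branch("develop"))        # dev
    print(shelf_for_branch("feature/login"))  # None
    print(next_develop_version("2.7.3"))      # 2.8.0
```

Keeping these rules in small functions, separate from the job that builds the deliverable, fits the principle above: deployment and tests can be launched at any time without replaying the build.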

Dis-continuous integration

The ideal flow that allows continuous delivery to production requires a number of automated mechanisms and tools that act as safety barriers, allowing products to be validated gradually until they are put into production. Some elements are often missing from the chain, which prevents us from giving scripts and other test robots full responsibility for the “decision” to deliver to production. Here is the order in which these elements are often put in place as an R&D organization matures:

- Construction of the deliverable (build)
- Unit tests
- Deployment scripts
- Code quality
- Triggering of tests
- Promotion mechanisms

When one or more of these elements are missing, the corresponding operation remains manual. The operations that follow must then also be triggered manually, even if automation exists to carry them out. We are then in a situation that I would describe as dis-continuous integration.
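As a rough illustration of this break in the chain, the sketch below splits a pipeline into the steps that can run unattended and those that need a manual trigger. It assumes, for simplicity, that the steps run in the order listed above, which is a simplification on my part; the step labels and the `split_chain` function are hypothetical.

```python
from typing import List, Set, Tuple

# Steps in the order listed above (assumed here to also be the execution order).
PIPELINE = [
    "build",
    "unit tests",
    "deployment scripts",
    "code quality",
    "test triggering",
    "promotion",
]


def split_chain(automated: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (steps that run unattended, steps that need a manual trigger)."""
    for index, step in enumerate(PIPELINE):
        if step not in automated:
            # The first missing automation breaks the chain: this step and
            # everything after it must be started by hand.
            return PIPELINE[:index], PIPELINE[index:]
    return PIPELINE[:], []


if __name__ == "__main__":
    unattended, manual = split_chain({"build", "unit tests", "code quality"})
    print(unattended)  # ['build', 'unit tests']
    print(manual)      # ['deployment scripts', 'code quality', 'test triggering', 'promotion']
```

Note that “code quality” is automated in this example and yet still ends up on the manual side, because the missing deployment scripts interrupt the flow before it.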

At a higher level of maturity, all operations are automated, but we are not yet ready to do without manual actions to trigger production releases. This is often the case when all the mechanics are available but the tests on the different environments are too limited. We still need to reassure ourselves with a few manual actions to “accept” the product and increase our level of confidence.

Then comes the day when we happily realize that we only step in to trigger the next operations, simply based on the status displayed by some systems. We can then chain this operation to the rest of the pipeline, because our action no longer adds any value. Moving to this stage of continuous delivery also requires good production monitoring, to be confident that the system will be resilient to a failure that has passed through the various validation steps undetected. This may be the subject of a future article!