Fine-tuning large language models (LLMs) is essential for optimizing their performance on specific tasks. OpenAI provides a robust framework for fine-tuning GPT models, allowing organizations to tailor AI behavior based on domain-specific requirements. This process plays a crucial role in LLM customization, enabling models to generate more accurate, relevant, and context-aware responses.

Fine-tuned LLMs can be applied in various scenarios such as financial analysis for risk assessment, customer support for personalized responses, and medical research for aiding diagnostics. They can also be used in software development for code generation and debugging, and legal assistance for contract review and case law analysis. In this guide, we’ll walk through the fine-tuning process using OpenAI’s platform and evaluate the fine-tuned model’s performance in real-world applications.

What is the OpenAI Platform?

The OpenAI platform offers a web-based tool for fine-tuning models, letting users customize them for specific tasks. It walks users step by step through preparing data, training models, and evaluating results. The platform also integrates with the API, so fine-tuned models can be deployed quickly and efficiently. It also offers automatic versioning and model monitoring to help ensure that models keep performing well over time, and models can be updated as new data becomes available.

Cost of Fine-Tuning and Inference

Here's how much it costs to train models on the OpenAI Platform and to use the resulting fine-tuned models.

| Model | Input ($ / 1M tokens) | Output ($ / 1M tokens) | Batch API input ($ / 1M tokens) | Batch API output ($ / 1M tokens) | Training ($ / 1M tokens) |
|---|---|---|---|---|---|
| gpt-4o-2024-08-06 | $3.750 | $15.000 | $1.875 | $7.500 | $25.000 |
| gpt-4o-mini-2024-07-18 | $0.300 | $1.200 | $0.150 | $0.600 | $3.000 |
| gpt-3.5-turbo | $3.000 | $6.000 | $1.500 | $3.000 | $8.000 |

For more information, visit this page: https://openai.com/api/pricing/
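To turn these per-million-token prices into a budget, the arithmetic is simple. Training is billed on the number of tokens in the training file multiplied by the number of epochs. Using the model is billed on input plus output tokens. The sketch below hard-codes the standard (non-Batch) prices from the table above. Those prices can change, so treat them as placeholders and check the pricing page. The token counts in the example are made-up illustrative values.

```python
# Standard (non-Batch) fine-tuned model prices in USD per 1M tokens, copied from
# the table above; prices change over time, so treat these as placeholders.
PRICES = {
    "gpt-4o-2024-08-06": {"input": 3.75, "output": 15.00, "training": 25.00},
    "gpt-4o-mini-2024-07-18": {"input": 0.30, "output": 1.20, "training": 3.00},
    "gpt-3.5-turbo": {"input": 3.00, "output": 6.00, "training": 8.00},
}


def training_cost(model: str, file_tokens: int, n_epochs: int) -> float:
    """Approximate training cost: every token in the file is billed once per epoch."""
    return file_tokens * n_epochs * PRICES[model]["training"] / 1_000_000


def inference_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Approximate cost of one or more calls to the fine-tuned model."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


if __name__ == "__main__":
    # Illustrative numbers only: a 2M-token training file trained for 3 epochs.
    print(f"{training_cost('gpt-4o-mini-2024-07-18', 2_000_000, 3):.2f}")  # 18.00
    print(f"{inference_cost('gpt-4o-2024-08-06', 1_000, 500):.5f}")
```

Read the other way, the same formula shows that at $25 per 1M training tokens, a $700 GPT-4o training bill corresponds to about 28M billed tokens (file tokens times epochs).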

Fine-Tuning a Model on the OpenAI Platform

Fine-tuning a model allows users to customize models for specific use cases, improving their accuracy, relevance, and adaptability. In this guide, we focus on fine-tuning for more personalized, accurate, and context-aware responses in customer service interactions.

By fine-tuning a model on real customer queries and interactions, businesses can enhance response quality, reduce misunderstandings, and improve overall user satisfaction.


Now let’s see how we can train a model using the OpenAI Platform. We will do this in 4 steps:

- Identifying the dataset

- Downloading the dataset for fine-tuning

- Importing and Preprocessing the Data

- Fine-tuning on OpenAI Platform

Let’s begin!

Step 1: Identifying the Dataset

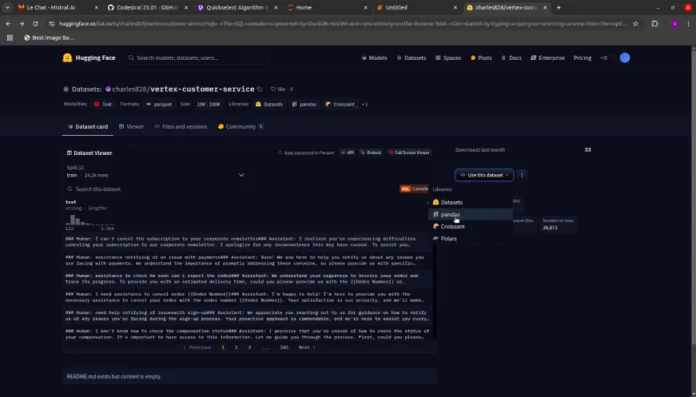

To fine-tune the model, we first need a high-quality dataset tailored to our use case. For this fine-tuning process, I downloaded the dataset from Hugging Face, a popular platform for AI datasets and models. You can find a wide range of datasets suitable for fine-tuning by visiting Hugging Face Datasets. Simply search for a relevant dataset, download it, and preprocess it as needed so that it fits your specific requirements.

Step 2: Downloading the Dataset for Fine-Tuning

The customer service data for the fine-tuning process is taken from Hugging Face datasets. You can access it from here.

OpenAI's fine-tuning expects training data in a specific chat format: a JSONL file in which each line is one complete conversation. Here's a sample record for GPT-4o, GPT-4o-mini, and GPT-3.5-turbo:

```json
{"messages": [{"role": "system", "content": "This is an AI assistant for answering FAQs."}, {"role": "user", "content": "What are your customer support hours?"}, {"role": "assistant", "content": "Our customer support is available 24/7. How else may I assist you?"}]}
```

In the next step, we will check what our data looks like and make the necessary adjustments if it is not in the required format.
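Before converting a whole dataset, it can help to check records against this shape programmatically. The sketch below is a simplified validator. `validate_record` is our own hypothetical helper, not an OpenAI function. It checks only the basics:

- the line parses as JSON;
- the `messages` list is non-empty;
- every message uses one of the roles in this guide and has string content;
- there is at least one assistant message for the model to learn from.

OpenAI's own upload checks are stricter, so a file that passes this sketch can still be rejected.

```python
import json
from typing import List

ALLOWED_ROLES = {"system", "user", "assistant"}  # roles used in this guide


def validate_record(line: str) -> List[str]:
    """Return a list of problems found in one JSONL line (empty list = looks OK)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i} is not an object")
            continue
        if msg.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i} has unexpected role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            problems.append(f"message {i} has non-string content")
    # A training example needs an assistant turn to provide the target response
    if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message to learn from")
    return problems
```

Applied to the sample record above, `validate_record` should return an empty list. A record missing its assistant turn comes back with a description of the problem.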

Step 3: Importing and Preprocessing the Data

Now we will import the data and preprocess it into the required format.

First, we load the training split into a Jupyter Notebook with pandas so we can modify it to match the required format.

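```python
import pandas as pd

# Parquet files for the train and test splits of the Hugging Face dataset
splits = {'train': 'data/train-00000-of-00001.parquet', 'test': 'data/test-00000-of-00001.parquet'}

# Reading "hf://" paths requires the huggingface_hub package to be installed
df_train = pd.read_parquet("hf://datasets/charles828/vertex-ai-customer-support-training-dataset/" + splits["train"])
```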

The dataset has six columns, but we only need two: “instruction” and “response”. These columns contain the customer queries and the corresponding responses.

Now we can use these two columns to create the JSONL file needed for fine-tuning.

```python
import json

# Keep only the customer query and the reference answer
messages = df_train[["instruction", "response"]]

with open("query_dataset.jsonl", "w", encoding="utf-8") as jsonl_file:
    for _, row in messages.iterrows():
        user_content = row["instruction"]
        assistant_content = row["response"]

        # One chat-formatted training example per row
        jsonl_entry = {
            "messages": [
                {"role": "system", "content": "You are an assistant who writes in a clear, informative, and engaging style."},
                {"role": "user", "content": user_content},
                {"role": "assistant", "content": assistant_content},
            ]
        }
        # json.dumps produces a single line; "\n" separates the records
        jsonl_file.write(json.dumps(jsonl_entry) + "\n")
```

As shown above, we iterate through the DataFrame and write one JSON object per line to create the JSONL file.
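Because JSONL stores one JSON object per line, you can check the finished file by streaming it one line at a time instead of loading it all at once. The sketch below assumes the file name `query_dataset.jsonl` from the code above. `iter_jsonl` and `count_roles` are hypothetical helper names chosen for this example.

```python
import json
from collections import Counter
from typing import Iterator


def iter_jsonl(path: str) -> Iterator[dict]:
    """Yield one parsed record per non-blank line, without reading the whole file."""
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines, e.g. at the end of the file
            try:
                yield json.loads(line)
            except json.JSONDecodeError as exc:
                raise ValueError(f"line {line_no} is not valid JSON") from exc


def count_roles(path: str) -> Counter:
    """Count messages per role across the file as a quick sanity check before upload."""
    counts = Counter()
    for record in iter_jsonl(path):
        for message in record["messages"]:
            counts[message["role"]] += 1
    return counts
```

Each record written above contains exactly one system, one user, and one assistant message. So `count_roles("query_dataset.jsonl")` should report the same count for all three roles, equal to the number of rows in `df_train`.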

Here we store our data in JSONL (JSON Lines) format, which is slightly different from JSON.

JSON stores data as a hierarchical structure (objects and arrays) in a single document, making it suitable for structured data with nesting. Below is an example of the JSON file format.

```json
{
  "users": [
    {"name": "Alice", "age": 25},
    {"name": "Bob", "age": 30}
  ]
}
```

JSONL consists of multiple JSON objects, one complete object per line, with no enclosing top-level array. Each line can still contain nested objects and arrays, as our training records do. Because every line can be parsed on its own, this format is efficient for streaming, processing large datasets, and handling data line by line. Below is an example of the JSONL file format.

```json
{"name": "Alice", "age": 25}
{"name": "Bob", "age": 30}
```

Step 4: Fine-tuning on OpenAI Platform

Now we will use query_dataset.jsonl to fine-tune GPT-4o. To do this, follow the steps below.

1. Go to this website and sign in if you haven’t signed in already. Once logged in, click on “Learn more” to learn more about the fine-tuning process.

2. Click on ‘Create’ and a small window will pop up.

Here is a breakdown of the hyperparameters in the above image:

Batch Size: This is the number of training examples (data points) the model processes before each update to its weights. Instead of processing all the data at once, the model works through small chunks (batches). Smaller batches mean more weight updates per epoch, so training takes longer, but the result can sometimes be a better model. Larger batches give more stable updates and train faster. Finding the right balance usually takes some experimentation.

Learning Rate Multiplier: This is a factor applied to the base learning rate, controlling how much the model's weights change after each update. If it is set high, the model may learn faster but can overshoot the best solution. If it is set low, the model learns more slowly but may be more precise.

Number of Epochs: An “epoch” is one complete pass through the entire training dataset. The number of epochs tells you how many times the model will learn from the entire dataset. More epochs typically allow the model to learn better, but too many can lead to overfitting.
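A little arithmetic shows how batch size and epochs interact. As a rough model, each epoch takes ceil(examples / batch_size) weight updates, and the total is that number multiplied by the number of epochs. This is an approximation for intuition, not necessarily how the platform counts or reports steps. The dataset size below is illustrative.

```python
import math


def total_training_steps(n_examples: int, batch_size: int, n_epochs: int) -> int:
    """Approximate number of weight updates: batches per epoch times epochs."""
    if batch_size <= 0 or n_epochs <= 0:
        raise ValueError("batch_size and n_epochs must be positive")
    steps_per_epoch = math.ceil(n_examples / batch_size)  # the last batch may be partial
    return steps_per_epoch * n_epochs


# Same data, different batch sizes: smaller batches mean more (noisier) updates.
for bs in (8, 32):
    print(bs, total_training_steps(n_examples=1_000, batch_size=bs, n_epochs=3))
# prints "8 375" then "32 96"
```

Tripling the number of epochs triples the step count. Because training is billed once per epoch for every token in the file, it also triples the training cost.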

3. Select the method as ‘Supervised’ and the ‘Base Model’ of your choice. I have selected GPT-4o.

4. Upload the JSONL file (query_dataset.jsonl) as the training data.

5. Add a ‘Suffix’ relevant to the task on which you want to fine-tune the model.

6. Choose the hyperparameters or leave them at their default values.

7. Now click on ‘Create’ and the fine-tuning will start.

8. Once the fine-tuning is completed it will show as follows:

9. Now we can compare the fine-tuned model with the pre-existing model by clicking on the ‘Playground’ in the bottom right corner.
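You can also create the same job programmatically. The sketch below assumes:

- the official `openai` Python package (v1 or later) is installed;
- an `OPENAI_API_KEY` environment variable is set.

`files.create` and `fine_tuning.jobs.create` are real SDK methods. Parameter support varies across API versions, however. For example, newer versions also accept a `method` argument for hyperparameters, so check the current API reference. The helper names, suffix, and epoch value are illustrative, not the settings used in this guide.

```python
from typing import Optional

BASE_MODEL = "gpt-4o-2024-08-06"  # fine-tunable GPT-4o snapshot from the pricing table


def build_job_params(training_file_id: str, suffix: str,
                     n_epochs: Optional[int] = None) -> dict:
    """Assemble keyword arguments for fine_tuning.jobs.create; omitted values use defaults."""
    params = {"training_file": training_file_id, "model": BASE_MODEL, "suffix": suffix}
    if n_epochs is not None:
        params["hyperparameters"] = {"n_epochs": n_epochs}
    return params


def start_fine_tune(path: str = "query_dataset.jsonl", suffix: str = "customer-support") -> str:
    """Upload the JSONL file and start a fine-tuning job; returns the job ID."""
    from openai import OpenAI  # imported lazily so build_job_params works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(**build_job_params(uploaded.id, suffix))
    return job.id
```

After starting a job, `client.fine_tuning.jobs.retrieve(job_id)` reports its `status`. Once the job succeeds, its `fine_tuned_model` field holds the model name to use in the Playground or the API.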

Important Note:

Fine-tuning duration and cost depend on the dataset size, the number of epochs, and the base model you choose. A small dataset of around 100 samples costs significantly less but may not be enough to fine-tune the model sufficiently, while larger datasets require more time and money. In my case, the dataset had approximately 24K samples, so fine-tuning took around 7 to 8 hours and cost approximately $700.

Caution

Given the high cost, it’s recommended to start with a smaller dataset for initial testing before scaling up. Ensuring the dataset is well-structured and relevant can help optimize both performance and cost efficiency.
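One way to follow this advice is to take a random pilot subset from the full JSONL file and fine-tune on that first. The sketch below uses only the standard library. `sample_jsonl` is a hypothetical helper, and the subset size and file names are arbitrary choices, not recommendations from OpenAI. The helper reads the whole source file into memory, which is fine for a dataset of this size.

```python
import random


def sample_jsonl(src: str, dst: str, n: int, seed: int = 42) -> int:
    """Copy a random subset of up to n records from src to dst; returns the count written."""
    with open(src, encoding="utf-8") as f:
        lines = [line for line in f if line.strip()]  # skip blank lines
    rng = random.Random(seed)  # fixed seed so the pilot subset is reproducible
    subset = rng.sample(lines, min(n, len(lines)))
    with open(dst, "w", encoding="utf-8") as f:
        # Make sure every record ends with a newline (the file's last line may not)
        f.writelines(line if line.endswith("\n") else line + "\n" for line in subset)
    return len(subset)
```

For example, `sample_jsonl("query_dataset.jsonl", "pilot_dataset.jsonl", 500)` writes a 500-example file. You can upload it in place of the full dataset to check the workflow and the quality of the output before paying for a full run.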

GPT-4o vs. Fine-Tuned GPT-4o: Performance Check

Now that we have fine-tuned the model, we'll compare its performance with the base GPT-4o and analyze responses from both models to see whether accuracy, clarity, understanding, and relevance have improved. This will help us determine whether the fine-tuned model meets our specific needs and performs better on the intended tasks. For brevity, I am showing sample results for 3 prompts from both the fine-tuned and the standard GPT-4o models.
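The Playground comparison can also be reproduced in code. Send identical messages to both models, including the same system prompt used in training. The sketch below assumes the `openai` Python package (v1 or later). The fine-tuned model ID is a made-up placeholder; the real ID, which starts with `ft:`, appears on the job's details page once training finishes. `build_messages` and `compare` are hypothetical helper names.

```python
from typing import Dict, List

# Same system prompt as in the training JSONL, so the comparison is like-for-like.
SYSTEM_PROMPT = "You are an assistant who writes in a clear, informative, and engaging style."

MODELS = {
    "base": "gpt-4o-2024-08-06",
    "fine-tuned": "ft:gpt-4o-2024-08-06:your-org:your-suffix:abc123",  # hypothetical placeholder ID
}


def build_messages(query: str) -> List[Dict[str, str]]:
    """Chat messages for one test query, mirroring the training format minus the answer."""
    return [{"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": query}]


def compare(query: str) -> Dict[str, str]:
    """Send the same query to each model and collect the replies."""
    from openai import OpenAI  # lazy import: build_messages works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    replies = {}
    for label, model in MODELS.items():
        resp = client.chat.completions.create(model=model, messages=build_messages(query))
        replies[label] = resp.choices[0].message.content
    return replies
```

Sampling makes replies vary between runs. Before drawing conclusions from a handful of queries, consider running each query more than once or setting a lower `temperature`.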

Query 1

Query: “Help me submitting the new delivery address”

Response by the fine-tuned GPT-4o model:

Response by GPT-4o:

Comparative Analysis

The fine-tuned model delivers a more detailed and user-centric response compared to the standard GPT-4o. While GPT-4o provides a functional step-by-step guide, the fine-tuned model enhances clarity by explicitly differentiating between adding and editing an address. It is more engaging and reassuring to the user and offers proactive assistance. This demonstrates the fine-tuned model’s superior ability to align with customer service best practices. The fine-tuned model is therefore a stronger choice for tasks requiring user-friendly, structured, and supportive responses.

Query 2

Query: “I need assistance to change to the Account Category account”

Response by the fine-tuned GPT-4o model:

Response by GPT-4o:

Comparative Analysis

The fine-tuned model significantly enhances user engagement and clarity compared to the base model. While GPT-4o provides a structured yet generic response, the fine-tuned version adopts a more conversational and supportive tone, making interactions feel more natural.

Query 3

Query: “i do not know how to update my personal info”

Response by the fine-tuned GPT-4o model:

Response by GPT-4o:

Comparative Analysis

The fine-tuned model outperforms the standard GPT-4o by providing a more precise and structured response. While GPT-4o offers a functional answer, the fine-tuned model improves clarity by explicitly addressing key distinctions and presenting information in a more coherent manner. Additionally, it adapts better to the context, ensuring a more relevant and refined response.

Overall Comparative Analysis

| Feature | Fine-Tuned GPT-4o | GPT-4o (Base Model) |
|---|---|---|
| Empathy & Engagement | High – offers reassurance, warmth, and a personalized touch | Low – neutral and formal tone, lacks emotional depth |
| User Support & Understanding | Strong – makes users feel supported and valued | Moderate – provides clear guidance but lacks emotional connection |
| Tone & Personalization | Warm and engaging | Professional and neutral |
| Efficiency in Information Delivery | Clear instructions with added emotional intelligence | Highly efficient but lacks warmth |
| Overall User Experience | More engaging, comfortable, and memorable | Functional but impersonal and transactional |
| Impact on Interaction Quality | Enhances both effectiveness and emotional resonance | Focuses on delivering information without emotional engagement |

Conclusion

In this case, fine-tuning the model on customer queries improved its effectiveness at responding to them. It makes interactions feel more personal, friendly, and supportive, which leads to stronger connections and higher user satisfaction. While base models provide clear and accurate information, they can feel robotic and less engaging. Fine-tuning models through OpenAI's convenient web platform is a great way to build custom large language models for domain-specific tasks.

Frequently Asked Questions

A. Fine-tuning is the process of adapting a pre-trained AI model to perform a specific task or exhibit a particular behavior by training it further on a smaller, task-specific dataset. This allows the model to better understand the nuances of the task and produce more accurate or tailored results.

A. Fine-tuning enhances a model’s performance by teaching it to better handle the specific requirements of a task, like adding empathy in customer interactions. It helps the model provide more personalized, context-aware responses, making interactions feel more human-like and engaging.

A. Fine-tuning models can require additional resources and training, which may increase the cost. However, the benefits of a more effective, user-friendly model often outweigh the initial investment, particularly for tasks that involve customer interaction or complex problem-solving.

A. Yes, if you have the necessary data and technical expertise, you can fine-tune a model using machine learning frameworks like Hugging Face, OpenAI, or others. However, it typically requires a strong understanding of AI, data preparation, and training processes.

A. The time required to fine-tune a model depends on the size of the dataset, the complexity of the task, and the computational resources available. It can take anywhere from a few hours to several days or more for larger models with vast datasets.