Google has expanded its Gemini 2.0 family with several new experimental models. Gemini 2.0 Pro Experimental is designed to handle complex tasks, and it is giving tough competition to OpenAI’s o3-mini, especially in advanced coding and reasoning. In this battle of Google Gemini 2.0 Pro Experimental vs OpenAI o3-mini, we will test both models on three coding tasks, ranging from a simple JavaScript animation to Python games. So let the contest begin, and may the best coder win!

What is Google Gemini 2.0 Pro Experimental?

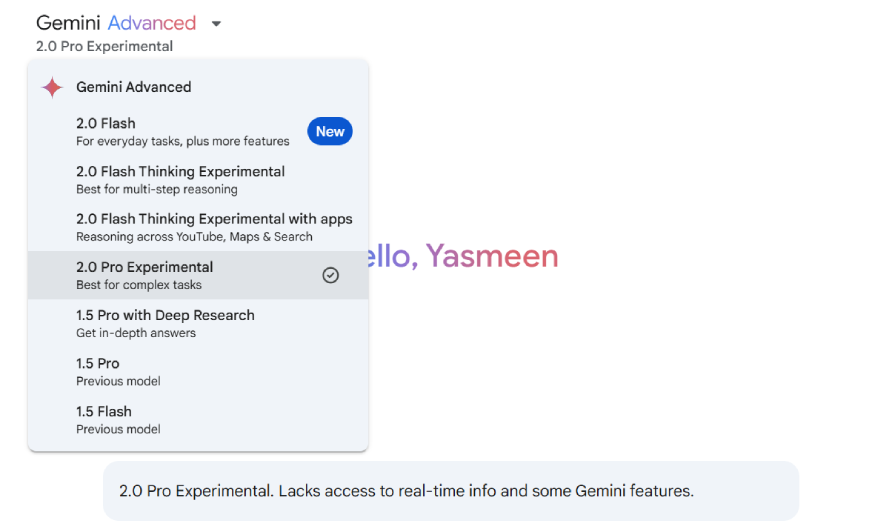

Gemini 2.0 Pro Experimental is Google’s latest advancement in AI models. It is designed to handle complex tasks and is positioned as Google’s strongest model for coding, reasoning, and comprehension. With a context window of up to 2 million tokens, this experimental version of Gemini 2.0 Pro excels at understanding and processing intricate prompts. Moreover, it integrates with tools like Google Search and code execution environments to provide accurate and up-to-date information.

This experimental model is now available in Google AI Studio, Vertex AI, and the Gemini app for Gemini Advanced users.

What is OpenAI o3-mini?

o3-mini is a streamlined version of OpenAI’s upcoming o3 model, the company’s most advanced reasoning model yet. This compact yet powerful reasoning model is designed to enhance performance in tasks such as coding, mathematics, and science. It offers faster and more accurate responses than its predecessor, o1-mini, and comes in low, medium, and high variants. The high variant spends more reasoning effort per response and is the one aimed at demanding coding and logic tasks.

o3-mini is now available to both free and paid users on the ChatGPT interface and associated API services. Free users have rate-limited access, while paid users can opt for the premium variant for enhanced performance.

Gemini 2.0 Pro Experimental vs o3-mini: Benchmark Comparison

Now, let’s start with the comparisons. In this section, we will look at how Gemini 2.0 Pro Experimental and o3-mini perform on standard benchmarks, using their scores across the task categories of the LiveBench leaderboard.

| Model | Organization | Global Average | Reasoning Average | Coding Average | Mathematics Average | Data Analysis Average | Language Average | IF Average |
|---|---|---|---|---|---|---|---|---|
| o3-mini-medium | OpenAI | 70.01 | 86.33 | 65.38 | 72.37 | 66.56 | 46.26 | 83.16 |
| o3-mini-low | OpenAI | 62.45 | 69.83 | 61.46 | 63.06 | 62.04 | 38.25 | 80.06 |
| o3-mini-high | OpenAI | 75.88 | 89.58 | 82.74 | 77.29 | 70.64 | 50.68 | 84.36 |
| gemini-2.0-pro-exp-02-05 | Google | 65.13 | 60.08 | 63.49 | 70.97 | 68.02 | 44.85 | 83.38 |

Source: livebench.ai
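As a quick sanity check on the table above, the short Python sketch below recomputes each Global Average from the six category scores. It assumes the global figure is a plain unweighted mean of the categories; that formula is our assumption rather than something stated in the table, and the names `CATEGORY_SCORES`, `global_average`, and `coding_gap` are purely illustrative.

```python
# Category scores copied from the LiveBench table above, in column order:
# reasoning, coding, mathematics, data analysis, language, IF.
CATEGORY_SCORES = {
    "o3-mini-medium": [86.33, 65.38, 72.37, 66.56, 46.26, 83.16],
    "o3-mini-low": [69.83, 61.46, 63.06, 62.04, 38.25, 80.06],
    "o3-mini-high": [89.58, 82.74, 77.29, 70.64, 50.68, 84.36],
    "gemini-2.0-pro-exp-02-05": [60.08, 63.49, 70.97, 68.02, 44.85, 83.38],
}

# Global Average column as reported in the same table.
REPORTED_GLOBAL = {
    "o3-mini-medium": 70.01,
    "o3-mini-low": 62.45,
    "o3-mini-high": 75.88,
    "gemini-2.0-pro-exp-02-05": 65.13,
}


def global_average(category_scores):
    """Unweighted mean of the category scores (assumed formula), to two decimals."""
    return round(sum(category_scores) / len(category_scores), 2)


def coding_gap(model_a, model_b):
    """Difference in Coding Average (list index 1) between two models, in points."""
    return round(CATEGORY_SCORES[model_a][1] - CATEGORY_SCORES[model_b][1], 2)


if __name__ == "__main__":
    for model, cats in CATEGORY_SCORES.items():
        print(f"{model}: recomputed {global_average(cats):.2f}, "
              f"reported {REPORTED_GLOBAL[model]:.2f}")
    print("Coding gap, o3-mini-high vs Gemini:",
          coding_gap("o3-mini-high", "gemini-2.0-pro-exp-02-05"))
```

Under that assumption, the recomputed averages agree with the reported column to within rounding, and the Coding Average is where the two models sit furthest apart.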


Gemini 2.0 Pro Experimental vs o3-mini: Performance Comparison

Now, let’s get to the actual coding battle! We will test both models on real coding tasks and compare their responses to see which performs better. Since Gemini 2.0 Pro Experimental is Google’s best model for complex coding tasks, we will pit it against OpenAI’s best coding model, o3-mini (high). We will be testing them on:

- Designing a JavaScript Animation

- Building a Physics Simulation Using Python

- Creating a Pygame

For each of these tasks, we’ll compare the output of the code generated by each model and award a score of 0 or 1. So let’s start with the first one.

Task 1: Designing a JavaScript Animation

Prompt: “Create a javascript animation where the word “CELEBRATE” is at the centre with fireworks happening all around it.”

Response by o3-mini (high)

Response by Gemini 2.0 Pro Experimental

Output of the generated code

| Model | Video |
|---|---|
| OpenAI o3-mini (high) | |
| Gemini 2.0 Pro Experimental | |

Comparative Analysis

OpenAI o3-mini (high) creates a stunning visual of a glowing sign reading ‘CELEBRATE’ surrounded by multicoloured fireworks. In comparison, Gemini 2.0 Pro’s output looks too basic, with fireworks that resemble splats of coloured water. For the richer visual, I choose o3-mini as the winner of this task.

Score: Gemini 2.0 Pro Experimental: 0 | o3-mini: 1

Task 2: Building a Physics Simulation Using Python

Prompt: “Write a python program that shows a ball bouncing inside a spinning pentagon, following the laws of Physics, increasing its speed every time it bounces off an edge.”

Response by o3-mini (high)

Response by Gemini 2.0 Pro Experimental

Output of the generated code

| Model | Video |
|---|---|
| OpenAI o3-mini (high) | |
| Gemini 2.0 Pro Experimental | |

Comparative Analysis

Gemini 2.0 Pro’s output goes a bit haywire in this task. Although the visual starts off correctly, beyond a certain speed the ball escapes the pentagon and then jumps from corner to corner, which was unexpected. Meanwhile, OpenAI’s o3-mini creates an accurate visual of what the prompt asks for: the ball bounces off the edges at an increasing speed, and the simulation ends when the ball reaches its top speed. o3-mini is the clear winner here.
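For readers wondering how a ball can leave its container as it speeds up, here is a minimal Python sketch of the two ideas usually involved. It is not the code produced by either model, and we do not know what caused the escape in Gemini’s output. The sketch assumes a frame-stepped simulation in which the ball’s velocity is mirrored about the wall’s unit normal and multiplied by a speed-up factor on every bounce; `reflect`, `substeps_needed`, and the default factor of 1.1 are illustrative choices of ours.

```python
import math


def reflect(velocity, normal, speedup=1.1):
    """Mirror `velocity` about a wall with unit normal `normal`, then scale it.

    Uses v' = v - 2 (v . n) n, followed by a speed-up factor so that the ball
    gets faster on every bounce (the factor is a hypothetical value).
    """
    vx, vy = velocity
    nx, ny = normal
    dot = vx * nx + vy * ny
    rx = vx - 2 * dot * nx
    ry = vy - 2 * dot * ny
    return (rx * speedup, ry * speedup)


def substeps_needed(speed, dt, radius):
    """Sub-steps per frame so the ball travels at most one radius per sub-step.

    If a ball moves further than its own size in a single step, a collision
    check done only at the end of the step can miss the wall entirely, which
    is one common way for a fast ball to end up outside its container.
    """
    return max(1, math.ceil(speed * dt / radius))


if __name__ == "__main__":
    # A ball moving down and to the right hits a floor whose normal points up.
    bounced = reflect((3.0, -4.0), (0.0, 1.0))
    print("velocity after bounce:", bounced, "speed:", math.hypot(*bounced))

    # At 60 frames per second, a slow ball needs one step per frame, while a
    # fast one needs several to avoid skipping past a wall.
    for speed in (100.0, 2000.0):
        print(speed, "->", substeps_needed(speed, dt=1 / 60, radius=10.0), "sub-steps")
```

Because the speed grows geometrically with each bounce, any fixed time step eventually becomes too coarse, which is why simulations of this kind typically either cap the speed or subdivide the step.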

Score: Gemini 2.0 Pro Experimental: 0 | o3-mini: 2

Task 3: Creating a Pygame

Prompt: “I am a beginner at coding. Write me a code to create an autonomous snake game where 10 snakes compete with each other. Make sure all the snakes are of different colour.”

Response by o3-mini (high)

Response by Gemini 2.0 Pro Experimental

Output of the generated code

| Model | Video |
|---|---|
| OpenAI o3-mini (high) | |
| Gemini 2.0 Pro Experimental | |

Comparative Analysis

Both models have created very similar games, in which 10 snakes of different colours go after the same food. However, the experimental version of Gemini 2.0 Pro added a clear scoring chart at the end of the game, which makes it feel more like an actual game to watch. The grid drawn in the background also helps the viewer follow the movement of the snakes. Meanwhile, o3-mini’s snake game seems to end abruptly. Hence, Gemini 2.0 Pro Experimental wins this round!
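To give beginners a feel for the two building blocks such a game needs, here is a small Python sketch that is independent of both models’ responses. It assumes snakes live on an integer grid and chase the food greedily, and it picks colours by spacing hues evenly around the colour wheel; `distinct_colours` and `step_towards` are hypothetical helper names, and a real game would also need collision handling and drawing.

```python
import colorsys


def distinct_colours(n):
    """Return n RGB tuples (0-255), evenly spaced around the hue wheel."""
    colours = []
    for i in range(n):
        r, g, b = colorsys.hsv_to_rgb(i / n, 1.0, 1.0)
        colours.append((round(r * 255), round(g * 255), round(b * 255)))
    return colours


def step_towards(head, food):
    """Move the snake's head one grid cell towards the food.

    Greedy rule (an assumption, not either model's logic): close the larger
    of the horizontal and vertical gaps first, and stay put once on the food.
    """
    hx, hy = head
    fx, fy = food
    dx, dy = fx - hx, fy - hy
    if dx == 0 and dy == 0:
        return head
    if abs(dx) >= abs(dy):
        return (hx + (1 if dx > 0 else -1), hy)
    return (hx, hy + (1 if dy > 0 else -1))


if __name__ == "__main__":
    colours = distinct_colours(10)
    print("snake colours:", colours)

    # Walk a single snake from the origin to the food, one cell per tick.
    head, food = (0, 0), (3, 1)
    while head != food:
        head = step_towards(head, food)
        print("head at", head)
```

With ten snakes, the same `step_towards` rule would simply be applied to each head on every tick, which is why autonomous snakes tend to converge on the same piece of food.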

Score: Gemini 2.0 Pro Experimental: 1 | o3-mini: 2

Final Score: Gemini 2.0 Pro Experimental: 1 | o3-mini: 2

Conclusion

Both Google’s Gemini 2.0 Pro Experimental and OpenAI’s o3-mini have showcased impressive coding capabilities across the three tasks. While Gemini 2.0 Pro Experimental aced the snake game with added features like the scoring chart and the background grid, the overall result tilted in favour of o3-mini, which took two of the three points by delivering superior results in both the JavaScript animation and the Python physics simulation. This head-to-head comparison not only highlights the rapid advancements in AI-driven coding but also sets the stage for further innovations that will continue to empower developers at every skill level.

Frequently Asked Questions

Q1. What is Google Gemini 2.0 Pro Experimental?

A. Google Gemini 2.0 Pro Experimental is Google’s latest advanced AI model designed to handle complex tasks. It has enhanced coding, reasoning, and comprehension abilities. It features a context window of up to 2 million tokens and integrates with tools like Google Search and code execution environments.

Q2. What is OpenAI o3-mini?

A. OpenAI o3-mini is a streamlined version of OpenAI’s forthcoming o3 model, optimized for efficient reasoning and advanced coding tasks. The model is available in low, medium, and high variants, with the high variant using more reasoning effort to excel in coding, logic, and other complex challenges.

Q3. How can I access Gemini 2.0 Pro Experimental?

A. Google’s Gemini 2.0 Pro Experimental is available via platforms such as Google AI Studio, Vertex AI, and to Gemini Advanced users on the Gemini app.

Q4. How can I access OpenAI o3-mini?

A. OpenAI’s o3-mini is accessible through the ChatGPT interface and via API services, with different levels of access for free users and premium subscribers.