While working on agentic AI, developers often find themselves navigating trade-offs between speed, flexibility, and resource efficiency. While exploring agentic AI frameworks, I came across Agno (formerly Phidata). Agno is a lightweight framework for building multimodal agents. Its developers claim that agent instantiation is ~10,000x faster than in LangGraph and that agents use ~50x less memory. Sounds intriguing, right?

Agno and LangGraph offer very different experiences. After going hands-on with Agno and comparing its performance and architecture to LangGraph, here’s a breakdown of how they differ, where each one shines, and what Agno brings to the table.

TL;DR

- This article builds two agents with Agno: TriSage and a Market Analyst Agent.

- Use Agno if you want speed, low memory use, multimodal capabilities, and flexibility with models/tools.

- Use LangGraph if you prefer flow-based logic or structured execution paths, or if you are already tied into LangChain’s ecosystem.

Agno: What Does It Offer?

Agno is designed with a laser focus on performance and minimalism. At its core, Agno is an open-source, model-agnostic agent framework built for multimodal tasks—meaning it handles text, images, audio, and video natively. What makes it unique is how light and fast it is under the hood, even when orchestrating large numbers of agents with added complexity like memory, tools, and vector stores.

Key strengths that stand out:

- Blazing Instantiation Speed: Agent creation in Agno clocks in at about 2μs per agent, which Agno’s own benchmarks put at ~10,000x faster than LangGraph.

- Featherlight Memory Footprint: Agno agents use just ~3.75 KiB of memory on average, which Agno reports as ~50x less than LangGraph agents.

- Multimodal Native Support: No hacks or plugins—Agno is built from the ground up to work seamlessly with various media types.

- Model Agnostic: Agno doesn’t care if you’re using OpenAI, Claude, Gemini, or open-source LLMs. You’re not locked into a specific provider or runtime.

- Real-Time Monitoring: Agent sessions and performance can be observed live via Agno, which makes debugging and optimization much smoother.

Hands-On with Agno: Building TriSage Agent

Using Agno feels refreshingly efficient. You can spin up entire fleets of agents that not only operate in parallel but also share memory, tools, and knowledge bases. These agents can be specialized and grouped into multi-agent teams, and the memory layer supports storing sessions and states in a persistent database.

What’s really impressive is how Agno manages complexity without sacrificing performance. It handles real-world agent orchestration—like tool chaining, RAG-based retrieval, or structured output generation—without becoming a performance bottleneck.

If you’ve worked with LangGraph or similar frameworks, you’ll immediately notice the startup lag and resource consumption that Agno avoids. This becomes a critical differentiator at scale. Let’s build the TriSage Agent.

Installing Required Libraries

!pip install -U agno

!pip install duckduckgo-search

!pip install openai

!pip install pycountry

These are shell commands (the leading ! runs them from a notebook cell) that install the required Python packages:

- agno: Core framework used to define and run AI agents.

- duckduckgo-search: Lets agents use DuckDuckGo to search the web.

- openai: For interfacing with OpenAI’s models (this walkthrough uses o3-mini and gpt-4o).

- pycountry: Helps handle country data. It is not called directly here; as far as I can tell, it is needed as a dependency of GoogleSearchTools.
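Before moving on, a quick sanity check can confirm that the installs worked. The sketch below is my own addition and not part of Agno: missing_packages is a hypothetical helper, and the import names (for example duckduckgo_search for the duckduckgo-search package) are assumptions that may differ between package versions.

```python
from importlib.util import find_spec


def missing_packages(module_names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in module_names if find_spec(name) is None]


# Assumed import names for the packages installed above.
REQUIRED = ["agno", "duckduckgo_search", "openai", "pycountry"]

if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Still missing:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```

If anything shows up as missing, rerun the matching pip install line before continuing.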

Required Imports

from agno.agent import Agent

from agno.models.openai import OpenAIChat

from agno.tools.duckduckgo import DuckDuckGoTools

from agno.tools.googlesearch import GoogleSearchTools

from agno.tools.dalle import DalleTools

from agno.team import Team

from textwrap import dedent

API Key Setup

from getpass import getpass

OPENAI_KEY = getpass('Enter Open AI API Key: ')

import os

os.environ['OPENAI_API_KEY'] = OPENAI_KEY

- getpass(): Secure way to enter your API key (so it’s not visible).

- The key is then stored in the environment so that the agno framework can pick it up when calling OpenAI’s API.
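If you rerun the notebook often, typing the key every time gets tedious. A minimal sketch of a variation, assuming you sometimes export OPENAI_API_KEY beforehand: load_api_key is a hypothetical helper of mine (not an Agno or OpenAI function) that prompts only when the variable is not already set.

```python
import os
from getpass import getpass


def load_api_key(env_var="OPENAI_API_KEY", prompt="Enter OpenAI API key: "):
    """Return the key from the environment, prompting only when it is absent."""
    key = os.environ.get(env_var)
    if not key:
        key = getpass(prompt)      # hidden input, same idea as the snippet above
        os.environ[env_var] = key  # expose it to libraries that read the environment
    return key
```

Calling load_api_key() once at the top of the notebook then replaces the getpass and os.environ lines.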

web_agent – Searches the Web, writer_agent – Writes the Article, image_agent – Creates Visuals

web_agent = Agent(

name="Web Agent",

role="Search the web for information on Eiffel tower",

model=OpenAIChat(id="o3-mini"),

tools=[DuckDuckGoTools()],

instructions="Give historical information",

show_tool_calls=True,

markdown=True,

)

writer_agent = Agent(

name="Writer Agent",

role="Write comprehensive article on the provided topic",

model=OpenAIChat(id="o3-mini"),

tools=[GoogleSearchTools()],

instructions="Use outlines to write articles",

show_tool_calls=True,

markdown=True,

)

image_agent = Agent(

model=OpenAIChat(id="gpt-4o"),

tools=[DalleTools()],

description=dedent("""\

You are an experienced AI artist with expertise in various artistic styles,

from photorealism to abstract art. You have a deep understanding of composition,

color theory, and visual storytelling.\

"""),

instructions=dedent("""\

As an AI artist, follow these guidelines:

1. Analyze the user's request carefully to understand the desired style and mood

2. Before generating, enhance the prompt with artistic details like lighting, perspective, and atmosphere

3. Use the `create_image` tool with detailed, well-crafted prompts

4. Provide a brief explanation of the artistic choices made

5. If the request is unclear, ask for clarification about style preferences

Always aim to create visually striking and meaningful images that capture the user's vision!\

"""),

markdown=True,

show_tool_calls=True,

)

Combine into a Team

agent_team = Agent(

team=[web_agent, writer_agent, image_agent],

model=OpenAIChat(id="gpt-4o"),

instructions=["Give historical information", "Use outlines to write articles","Generate Image"],

show_tool_calls=True,

markdown=True,

)

Run the Whole Thing

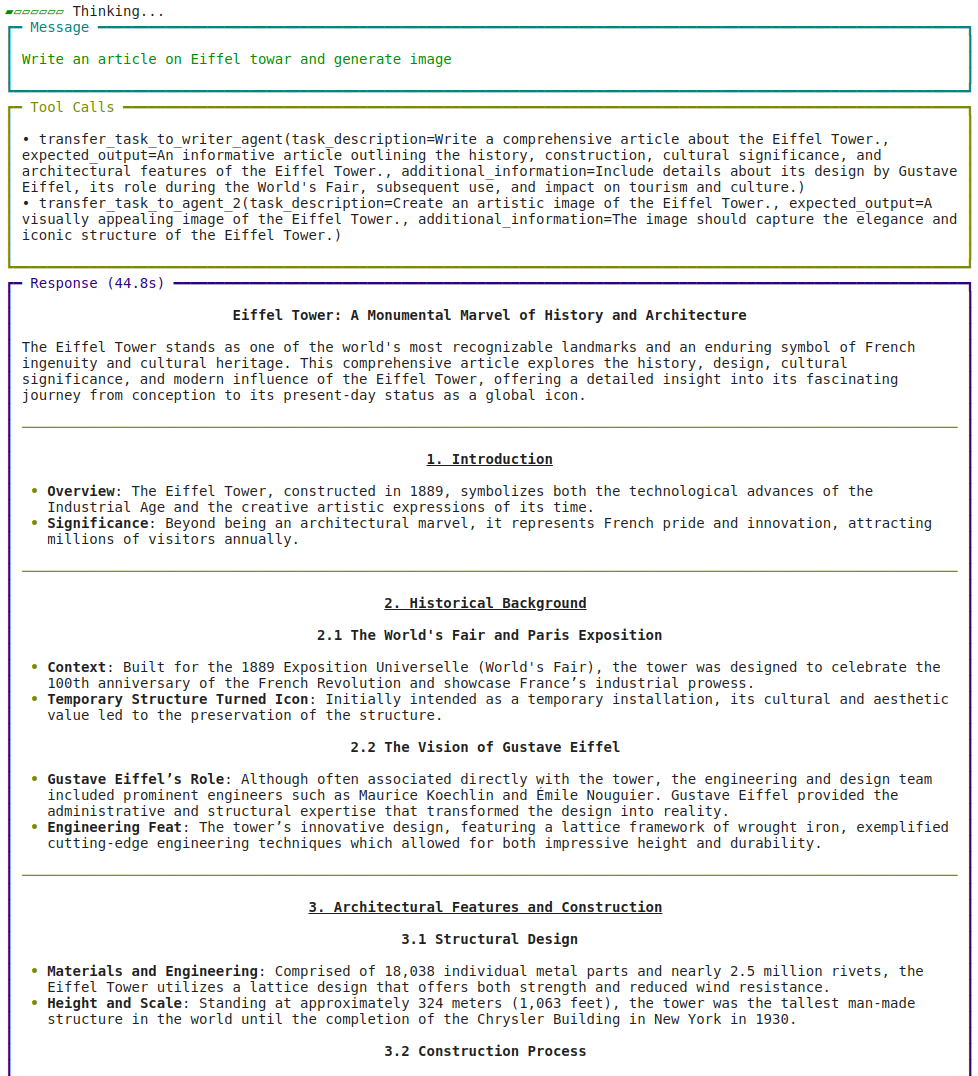

agent_team.print_response("Write an article on Eiffel Tower and generate image", stream=True)

Output

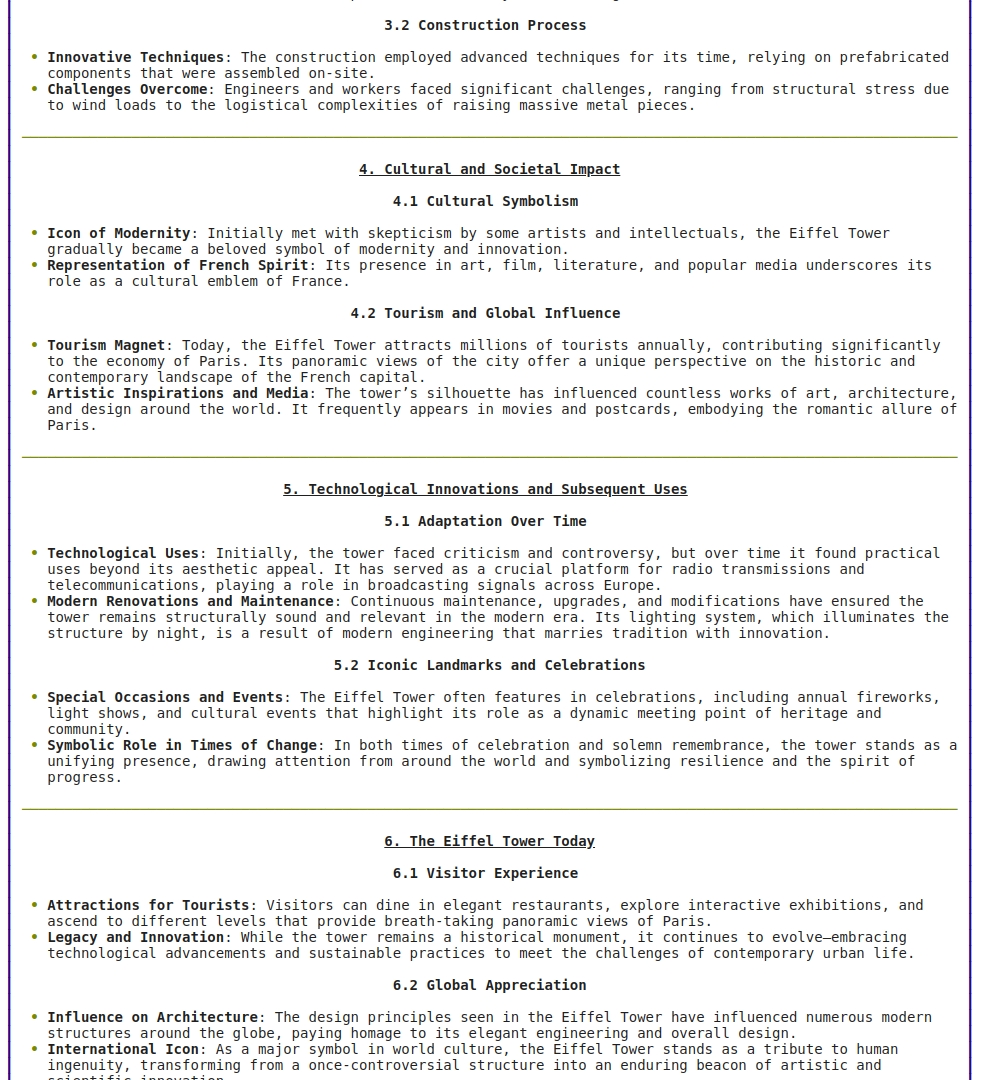

Continued Output

I have created a realistic image of the Eiffel Tower. The image captures the tower's full height and design, beautifully highlighted by the late afternoon sun. You can view it by clicking here.

Image Output

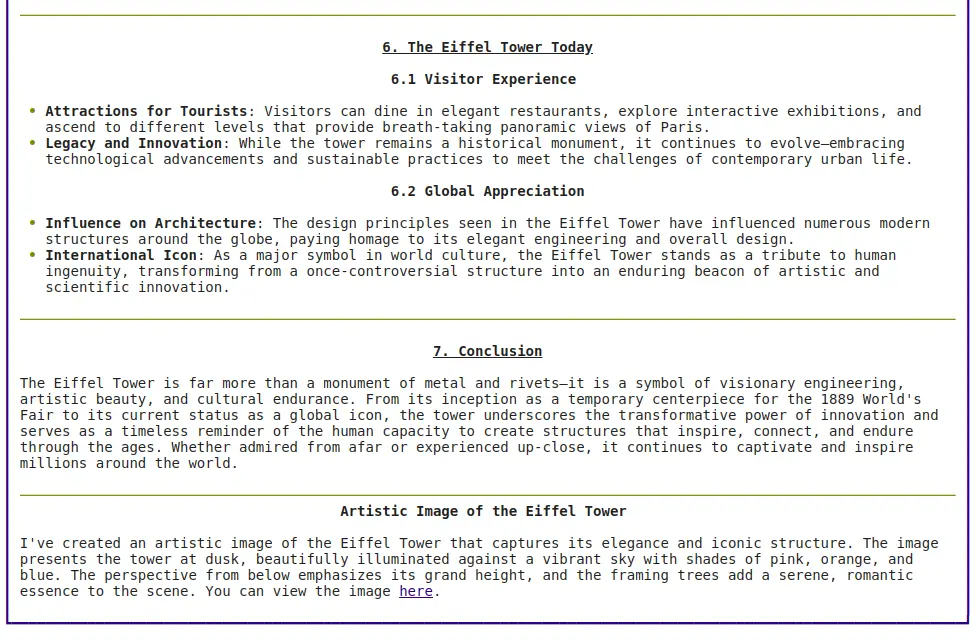

Hands-On with Agno: Building Market Analyst Agent

This Market Analyst Agent is a team-based system using Agno, combining a Web Agent for real-time info via DuckDuckGo and a Finance Agent for financial data via Yahoo Finance. Powered by OpenAI models, it delivers market insights and AI company performance using tables, markdown, and source-backed content for clarity, depth, and transparency.

from agno.agent import Agent

from agno.models.openai import OpenAIChat

from agno.tools.duckduckgo import DuckDuckGoTools

from agno.tools.yfinance import YFinanceTools

from agno.team import Team

web_agent = Agent(

name="Web Agent",

role="Search the web for information",

model=OpenAIChat(id="o3-mini"),

tools=[DuckDuckGoTools()],

instructions="Always include sources",

show_tool_calls=True,

markdown=True,

)

finance_agent = Agent(

name="Finance Agent",

role="Get financial data",

model=OpenAIChat(id="o3-mini"),

tools=[YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True)],

instructions="Use tables to display data",

show_tool_calls=True,

markdown=True,

)

agent_team = Agent(

team=[web_agent, finance_agent],

model=OpenAIChat(id="gpt-4o"),

instructions=["Always include sources", "Use tables to display data"],

show_tool_calls=True,

markdown=True,

)

agent_team.print_response("What's the market outlook and financial performance of top AI companies of the world?", stream=True)

Agno vs LangGraph: Performance Showdown

Let’s get into specifics. The figures below come from Agno’s official documentation:

| Metric | Agno | LangGraph | Factor |
|---|---|---|---|
| Agent Instantiation Time | ~2μs | ~20ms | ~10,000x faster |
| Memory Usage per Agent | ~3.75 KiB | ~137 KiB | ~50x lighter |

- The performance testing was done on an Apple M4 MacBook Pro using Python’s tracemalloc for memory profiling.

- Agno measured the average instantiation and memory usage over 1000 runs, isolating the Agent code to get a clean delta.
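To get a feel for that methodology, here is a rough sketch of the same idea using only the standard library. It is not Agno’s actual benchmark script: DummyAgent is a hypothetical stand-in class and benchmark is my own helper, so swap in a real agent constructor if you want comparable numbers.

```python
import time
import tracemalloc


class DummyAgent:
    """Hypothetical stand-in; replace with a real agent constructor to benchmark it."""

    def __init__(self):
        self.name = "demo"
        self.tools = []
        self.history = {}


def benchmark(factory, runs=1000):
    """Return (average seconds per instantiation, average bytes retained per instance)."""
    # Timing pass: tracemalloc stays off here because tracing slows allocations down.
    start = time.perf_counter()
    for _ in range(runs):
        factory()
    avg_seconds = (time.perf_counter() - start) / runs

    # Memory pass: keep every instance alive so its allocations remain counted.
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    instances = [factory() for _ in range(runs)]
    after, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    avg_bytes = (after - before) / runs
    return avg_seconds, avg_bytes


if __name__ == "__main__":
    seconds, size = benchmark(DummyAgent)
    print(f"avg instantiation: {seconds * 1e6:.2f} µs, avg memory: {size / 1024:.2f} KiB")
```

Timing and memory are measured in separate passes on purpose, since running tracemalloc during the timing loop would inflate the instantiation numbers.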

This kind of speed and memory efficiency isn’t just about numbers—it’s the key to scalability. In real-world agent deployments, where thousands of agents may need to spin up concurrently, every millisecond and kilobyte matters.
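To make that concrete, the sketch below plugs the figures quoted in the table into a back-of-the-envelope calculation for a fleet of 10,000 agents. The fleet size, the fleet_cost helper, and the assumption of purely sequential startup are mine, and model or network latency is ignored entirely. Note that the memory figures as listed work out to roughly 36.5x rather than the rounded ~50x headline.

```python
# Figures as quoted in the comparison table above.
AGNO = {"instantiation_s": 2e-6, "memory_kib": 3.75}
LANGGRAPH = {"instantiation_s": 20e-3, "memory_kib": 137.0}


def fleet_cost(per_agent, n_agents):
    """Total sequential startup time (s) and memory (MiB) for n_agents."""
    total_seconds = per_agent["instantiation_s"] * n_agents
    total_mib = per_agent["memory_kib"] * n_agents / 1024
    return total_seconds, total_mib


speed_ratio = LANGGRAPH["instantiation_s"] / AGNO["instantiation_s"]
memory_ratio = LANGGRAPH["memory_kib"] / AGNO["memory_kib"]

if __name__ == "__main__":
    print(f"speed ratio: ~{speed_ratio:,.0f}x, memory ratio: ~{memory_ratio:.1f}x")
    for name, figures in (("Agno", AGNO), ("LangGraph", LANGGRAPH)):
        seconds, mib = fleet_cost(figures, 10_000)
        print(f"{name}: {seconds:.2f} s startup, {mib:.1f} MiB for 10,000 agents")
```

At this scale the quoted numbers translate to about 0.02 s and 37 MiB for Agno versus about 200 s and 1.3 GiB for LangGraph, which is where the difference starts to show up on an infrastructure bill.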

LangGraph, while powerful and more structured for certain flow-based applications, tends to struggle under this kind of load unless heavily optimized. That might not be an issue for low-scale apps, but it becomes expensive fast when running production-scale agents.

So… Is Agno Better Than LangGraph?

Not necessarily. It depends on what you’re building:

- If you’re working on flow-based agent logic (think: directed graphs of steps with high-level control), LangGraph might offer a more expressive structure.

- But if you need ultra-fast, low-footprint, multimodal agent execution, especially in high-concurrency or dynamic environments, Agno wins by a mile.

Agno clearly favours speed and system-level efficiency, whereas LangGraph leans into structured orchestration and reliability. That said, Agno’s developers themselves acknowledge that accuracy and reliability benchmarks are just as important, and they’re currently in the works. Until those are out, we can’t draw conclusions about correctness or resilience under edge cases.

Conclusion

From a hands-on perspective, Agno feels ready for real workloads, especially for teams building agentic systems at scale. Its real-time performance monitoring, support for structured output, and ability to plug in memory and vector knowledge make it a compelling platform for building robust applications quickly.

LangGraph isn’t out of the race—its strength lies in clear, flow-oriented control logic. But if you’re hitting scaling walls or need to run thousands of agents without melting your infrastructure, Agno is worth a serious look.
