Have you ever ordered groceries on Zepto? Even if you type a wrong word or misspell a product name, Zepto usually understands what you meant and shows the results you were looking for. Without that, users typing “kele chips” instead of “banana chips” would struggle to find what they want: misspellings and vernacular queries lead to a poor user experience and lower conversions. Zepto’s data science team built a system that tackles this problem with an LLM and retrieval-augmented generation (RAG) to fix multilingual misspellings. In this guide, we replicate this feature end to end, from a fuzzy query to a corrected output, and show how the underlying technology improves search quality and multilingual query resolution.

Understanding Zepto’s System

Technical Flow

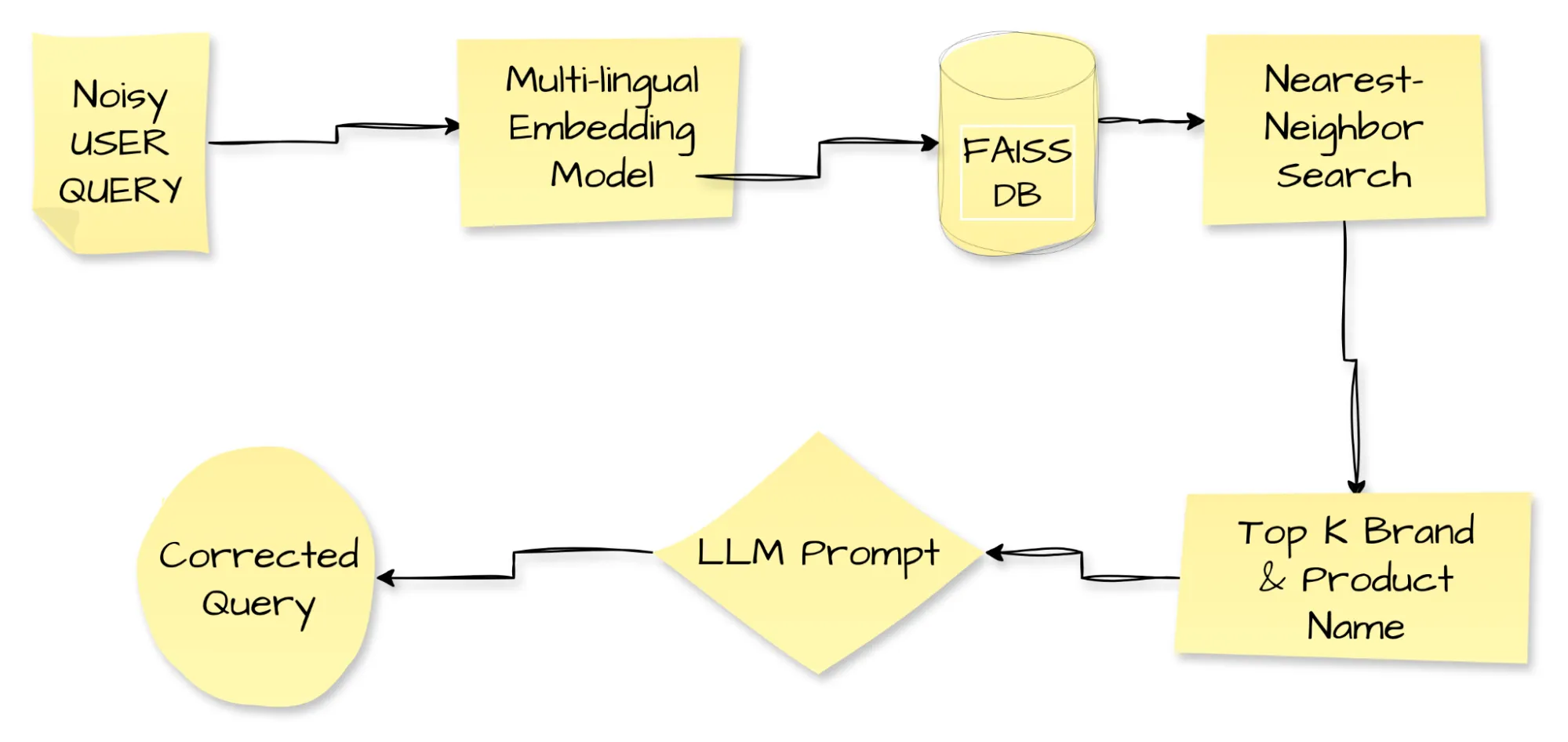

Let’s understand the technical flow that Zepto uses for multilingual query resolution. The flow involves several components, which we walk through shortly.

The diagram traces a noisy user query through its full correction journey. The misspelled or vernacular text enters the pipeline, and a multilingual embedding model converts it into a dense vector. The system feeds this vector into FAISS, the open-source similarity-search library from Facebook AI Research (now Meta), which returns the top-K brand and product names closest to the query in embedding space. Next, the pipeline passes both the noisy query and the retrieved names to an LLM prompt, and the LLM outputs a clean, corrected query. By using this loop to handle incorrect spellings, code-mixed phrases, and regional languages, Zepto reported a 7.5% increase in conversion rates for the affected queries.
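The flow in the diagram can be summarized as one small function. This is a minimal sketch, not Zepto’s code: `resolve_query`, `embed`, `search_top_k`, and `call_llm` are hypothetical stand-ins for the embedding model, the FAISS lookup, and the hosted LLM, and the prompt wording is an assumption.

```python
import json
from typing import Callable, List, Sequence


def resolve_query(
    raw_query: str,
    embed: Callable[[str], Sequence[float]],                     # stand-in for the embedding model
    search_top_k: Callable[[Sequence[float], int], List[str]],  # stand-in for the FAISS lookup
    call_llm: Callable[[str], str],                              # stand-in for the hosted LLM
    k: int = 5,
) -> str:
    """Noisy query -> dense vector -> top-k catalog names -> LLM prompt -> corrected query."""
    query_vector = embed(raw_query)
    candidates = search_top_k(query_vector, k)
    prompt = (
        "Correct this grocery search query using only the catalog names below.\n"
        + "\n".join(f"- {name}" for name in candidates)
        + f"\nQuery: {raw_query}\n"
        + 'Reply as JSON: {"corrected_query": "..."}'
    )
    try:
        corrected = json.loads(call_llm(prompt))["corrected_query"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return raw_query  # fall back to the original query if the LLM reply is unusable
    # Also fall back when the model returns null or an empty string
    return corrected if isinstance(corrected, str) and corrected else raw_query


# Usage with toy stubs in place of real models
catalog_names = ["Haldiram's Classic Banana Chips", "Kurkure Masala Munch"]
fake_embed = lambda text: [float(len(text))]
fake_search = lambda vector, k: catalog_names[:k]
fake_llm = lambda prompt: '{"corrected_query": "banana chips"}'
print(resolve_query("kele chips", fake_embed, fake_search, fake_llm))  # banana chips
```

Injecting the three components as callables keeps the data flow visible and lets each piece (embedding model, index, LLM) be swapped or tested independently.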

Core Components

Let’s now focus on the core concepts that we are using in this system.

1. Misspelled Queries & Vernacular Queries

Users often type vernacular terms, mixing English and regional words in one query: for example, “kele chips” (Hindi for “banana chips”) or “balekayi chips” (Kannada). Phonetic typing, such as “kothimbir” (the Marathi word for coriander typed in Latin script) or “paal” (Tamil for milk), makes traditional keyword search struggle. Without normalization or transliteration support, the meaning gets lost.
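To see why exact keyword matching struggles, the sketch below compares substring search with character-trigram Jaccard similarity, one classic fuzzy-matching technique. This is an illustration only, not what Zepto uses (Zepto relies on embeddings); the helper names and the tiny term list are assumptions.

```python
def trigrams(text: str) -> set:
    """Character trigrams of a lower-cased string padded with two spaces on each side."""
    padded = f"  {text.lower()}  "
    return {padded[i:i + 3] for i in range(len(padded) - 2)}


def jaccard(a: str, b: str) -> float:
    """Share of trigrams the two strings have in common."""
    ta, tb = trigrams(a), trigrams(b)
    return len(ta & tb) / len(ta | tb)


catalog_terms = ["coca-cola", "cheese spread", "banana chips", "atta"]


def exact_match(query: str) -> list:
    """Traditional substring search over catalog terms."""
    return [term for term in catalog_terms if query.lower() in term]


def fuzzy_match(query: str) -> str:
    """Return the catalog term with the highest trigram overlap."""
    return max(catalog_terms, key=lambda term: jaccard(query, term))


print(exact_match("cococola"))    # [] : the misspelling breaks substring search
print(fuzzy_match("cococola"))    # coca-cola
print(fuzzy_match("kele chips"))  # banana chips, but only via the shared word "chips"
```

Fuzzy matching rescues the misspelling, but it cannot know that “kele” means banana; bridging that vernacular gap is what the semantic retrieval and LLM steps are for.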

2. RAG (Retrieval-Augmented Generation)

RAG is a pipeline that combines semantic retrieval (vector embeddings and metadata lookup) with LLM generation. When Zepto receives a noisy, misspelled, or vernacular query, it uses RAG to retrieve the top-k relevant product names and brands, then feeds these retrieved names to the LLM along with the noisy query for correction.

Benefits of using RAG in Zepto’s use case:

- Grounds the LLM in real catalog context, reducing hallucination.

- Improves accuracy & ensures relevant brand-term corrections.

- Reduces prompt size and inference cost by narrowing context.
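A minimal sketch of the augmentation step: only the retrieved names go into the prompt, so its size grows with k rather than with the catalog. The helper name `build_grounded_prompt` and the prompt wording are illustrative assumptions, not Zepto’s actual prompt.

```python
from typing import List


def build_grounded_prompt(noisy_query: str, retrieved: List[str]) -> str:
    """Insert only the top-k retrieved names, so the LLM corrects against real catalog terms."""
    context = "\n".join(f"- {name}" for name in retrieved)
    return (
        "You correct grocery search queries.\n"
        "Only use brand and product names from this list:\n"
        f"{context}\n"
        f"Query: {noisy_query}\n"
        "Corrected query:"
    )


full_catalog = [f"Product {i}" for i in range(10_000)]  # stand-in for a large catalog
retrieved_names = ["Amul Gold Milk 1L", "Amul Cheese Slices 100g", "Britannia Cheese Spread 180g"]

demo_prompt = build_grounded_prompt("amool doodh", retrieved_names)
print(demo_prompt)
print(len(demo_prompt) < len("\n".join(full_catalog)))  # True: 3 names instead of 10,000
```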

3. Vector Database

A vector database is a specialized database designed to store and index embeddings (numerical representations of data such as words or sentences) and to retrieve the high-dimensional vectors most similar to a query vector. FAISS is an open-source library for efficient similarity search and clustering of dense vectors, widely used to search large embedding collections quickly. In Zepto’s system, FAISS stores the embeddings of brand names, tags, and product names.
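FAISS’s exact flat indexes (for example `IndexFlatIP`, which ranks by inner product) compare the query against every stored vector; with L2-normalized vectors, inner product equals cosine similarity. The pure-Python sketch below performs that exact search on tiny, made-up 3-dimensional vectors in place of real embeddings. FAISS adds optimized kernels and approximate indexes (such as IVF or HNSW) for large catalogs.

```python
import math
from typing import Dict, List, Tuple


def normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit L2 length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def top_k(query: List[float], index: Dict[str, List[float]], k: int) -> List[Tuple[str, float]]:
    """Exact cosine-similarity search: inner product of L2-normalized vectors, best first."""
    q = normalize(query)
    scores = [
        (name, sum(a * b for a, b in zip(q, normalize(vec))))
        for name, vec in index.items()
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:k]


# Made-up vectors; a real system stores model embeddings with hundreds of dimensions.
index = {
    "Haldiram's Classic Banana Chips": [0.9, 0.1, 0.0],
    "Kurkure Masala Munch": [0.7, 0.3, 0.1],
    "Amul Gold Milk 1L": [0.0, 0.2, 0.9],
}
for name, score in top_k([0.8, 0.2, 0.0], index, k=2):
    print(f"{score:.3f}  {name}")
```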

4. Stepwise Prompting & JSON Output

Zepto’s flow uses a modular prompt that breaks the complex task into small, sequential steps so the model can perform each one more reliably, which improves accuracy. The steps are: detect whether the query is misspelled or vernacular, correct the terms, translate them to canonical English terms, and output the result as a JSON structure.

JSON schema ensures reliability and readability, for example:

```json
{
  "original_query": "...",
  "corrected_query": "...",
  "translation": "..."
}
```

Their system prompt includes few-shot examples, which contain a mix of English and vernacular corrections to guide the LLM’s behavior.
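Because downstream code depends on those three keys, it helps to validate the LLM’s reply before using it. A minimal standard-library sketch, assuming the schema above; the helper name `parse_correction` and the fallback policy (reuse the raw query for every field) are hypothetical choices.

```python
import json

REQUIRED_KEYS = ("original_query", "corrected_query", "translation")


def parse_correction(raw_reply: str, original_query: str) -> dict:
    """Parse the LLM reply; fall back to the untouched query if the JSON is malformed or incomplete."""
    fallback = {key: original_query for key in REQUIRED_KEYS}
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError:
        return fallback
    # Every required key must be present and hold a string
    if not isinstance(data, dict) or not all(isinstance(data.get(k), str) for k in REQUIRED_KEYS):
        return fallback
    return {k: data[k] for k in REQUIRED_KEYS}


valid_reply = '{"original_query": "kele chips", "corrected_query": "kele chips", "translation": "banana chips"}'
print(parse_correction(valid_reply, "kele chips")["translation"])             # banana chips
print(parse_correction("Sure! Here you go", "kele chips")["corrected_query"])  # kele chips
```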

5. In-House LLM Hosting

Zepto uses Meta’s Llama3-8B, hosted in-house on Databricks for cost control and performance. They use instruct fine-tuning, a lightweight approach based on stepwise prompts and role-playing instructions. It keeps the LLM focused on prompt-level behavior and avoids costly full model retraining.

6. Implicit Feedback via User Reformulations

User feedback is vital while a feature is still new. When a search fails and the user quickly retypes the query and then finds what they wanted, that reformulation acts as an implicit correction signal that counts as a valid fix. Collecting these signals lets you add fresh few-shot examples to the prompt, add new synonyms to the retrieval database, and find bugs. In Zepto’s A/B test, the system delivered a 7.5% lift in conversion.
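One way to turn reformulations into usable signal is to pair a search that led nowhere with the user’s next search in the same session when that next search quickly produced an add-to-cart. The log format, the `SearchEvent` fields, the helper `mine_reformulations`, and the 30-second window below are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SearchEvent:
    session_id: str
    timestamp: float      # seconds since epoch
    query: str
    added_to_cart: bool   # did this search lead to an add-to-cart?


def mine_reformulations(events: List[SearchEvent], window_s: float = 30.0) -> List[Tuple[str, str]]:
    """Return (failed_query, successful_query) pairs from consecutive searches in one session."""
    pairs = []
    ordered = sorted(events, key=lambda e: (e.session_id, e.timestamp))
    for prev, curr in zip(ordered, ordered[1:]):
        same_session = prev.session_id == curr.session_id
        quick_retry = curr.timestamp - prev.timestamp <= window_s
        if same_session and quick_retry and not prev.added_to_cart and curr.added_to_cart:
            pairs.append((prev.query, curr.query))
    return pairs


log = [
    SearchEvent("s1", 100.0, "kele chips", False),
    SearchEvent("s1", 112.0, "banana chips", True),
    SearchEvent("s2", 200.0, "milk", True),
]
print(mine_reformulations(log))  # [('kele chips', 'banana chips')]
```

Each mined pair is a candidate few-shot example for the prompt or a candidate synonym tag for the retrieval database; in practice you would also filter by frequency before trusting a pair.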

Replicating the Query Resolution System

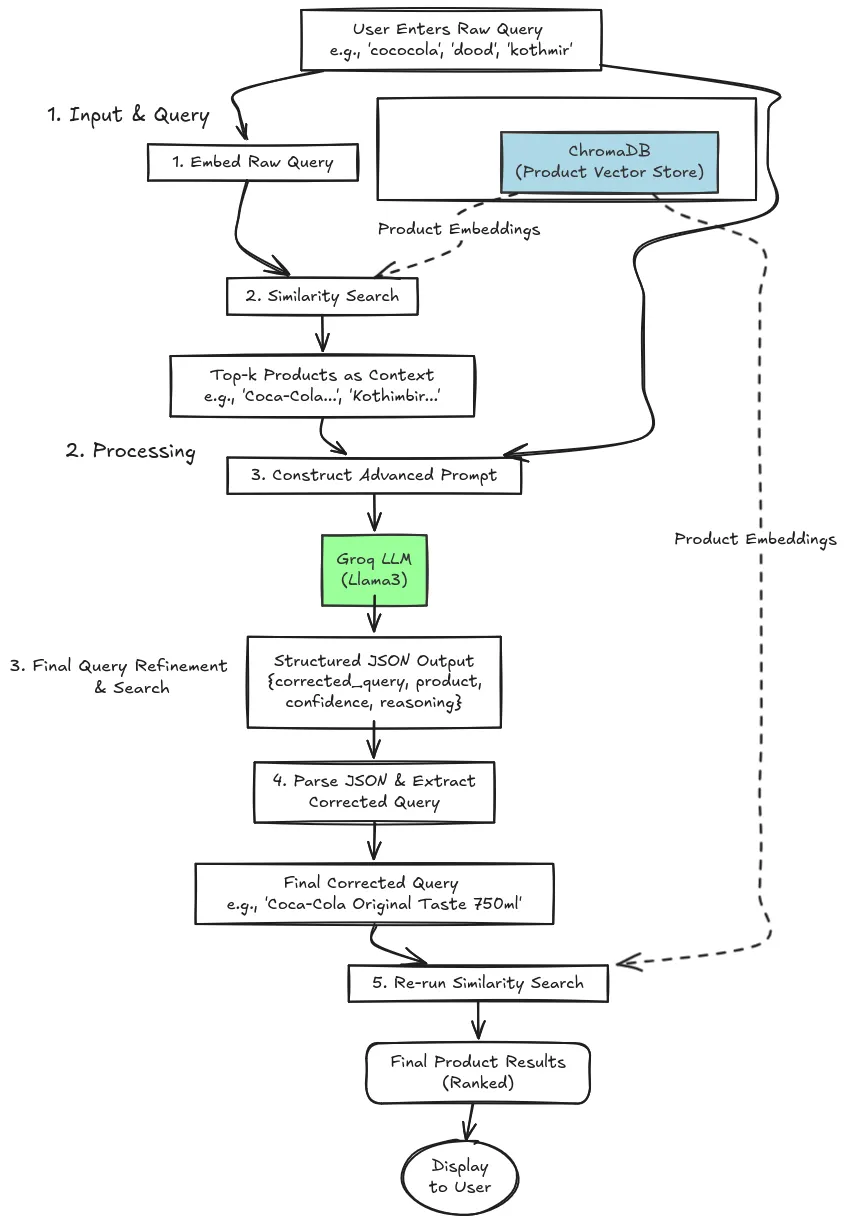

Now, let’s replicate Zepto’s multilingual query resolution system with our own implementation. The flow chart above shows the system we are going to build.

Our implementation follows the same strategy outlined by Zepto:

- Semantic Retrieval: We first take the user’s raw query and find a list of top-k potentially relevant products from our entire catalog. This is done by comparing the query’s vector embedding against the embeddings of our products stored in a vector database. This step provides the necessary context.

- LLM-Powered Correction and Selection: The retrieved products (the context) and the original query are then passed to a Large Language Model (LLM). The LLM’s task is not just to correct spelling, but to analyze the context and select the most likely product the user intended to find. It then returns a clean, corrected query and the reasoning behind its decision in a structured format.

Procedure

The process can be simplified in the following 3 steps:

- Input and Query

The user enters a raw query, which may contain noise or be in a different language. The system embeds the raw query directly and runs a similarity search against the product embeddings stored in the ChromaDB vector database, which returns the top-k most relevant products.

- Processing

After retrieving the top-k products, we feed them, along with the noisy user query, to Llama 3 through a detailed prompt. The model returns a compact JSON object holding the corrected query, product name, confidence score, and reasoning, so you can see exactly why it chose that product or brand name. This makes the query correction transparent.

- Final Query Refinement and Search

This stage parses the LLM’s JSON output. The extracted corrected query gives us the product or brand name most relevant to the user’s raw query. Finally, we rerun the similarity search on the vector database with the corrected query to retrieve the details of the matching products. Together, these stages implement the multilingual query resolution system.

Hands-on Implementation

Now that we understand how the query resolution system works, let’s implement it hands-on, step by step, from installing the dependencies to the final similarity search.

Step 1: Installing the Dependencies

First, we install the necessary Python libraries. We’ll use langchain for orchestrating the components, langchain-groq for fast LLM inference, fastembed (through its langchain-community integration) for efficient embeddings, langchain-chroma for the vector database, and pandas for data handling.

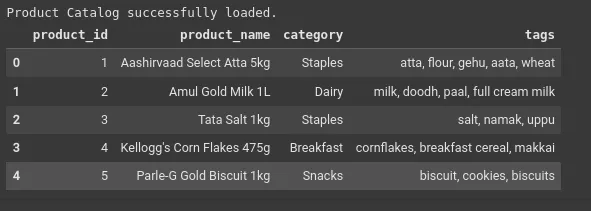

```python
# Notebook cell (Jupyter/Colab); drop the leading "!" when running in a terminal
!pip install -q pandas langchain langchain-core langchain-groq langchain-chroma fastembed langchain-community
```

Step 2: Create an Expanded and Complex Dummy Dataset

To thoroughly test the system, we need a dataset that reflects real-world challenges. This CSV includes:

- A wider variety of products (20+).

- Common brand names (e.g., Coca-Cola, Maggi).

- Multilingual and vernacular terms (dhaniya, kanda, nimbu).

- Potentially ambiguous items (cheese spread, cheese slices).

```python
import pandas as pd
from io import StringIO

# A small catalog with brand names, vernacular tags, and near-duplicate products
csv_data = """product_id,product_name,category,tags
1,Aashirvaad Select Atta 5kg,Staples,"atta, flour, gehu, aata, wheat"
2,Amul Gold Milk 1L,Dairy,"milk, doodh, paal, full cream milk"
3,Tata Salt 1kg,Staples,"salt, namak, uppu"
4,Kellogg's Corn Flakes 475g,Breakfast,"cornflakes, breakfast cereal, makkai"
5,Parle-G Gold Biscuit 1kg,Snacks,"biscuit, cookies, biscuits"
6,Cadbury Dairy Milk Silk,Chocolates,"chocolate, choco, silk, dairy milk"
7,Haldiram's Classic Banana Chips,Snacks,"kele chips, banana wafers, chips"
8,MDH Deggi Mirch Masala,Spices,"mirchi, masala, spice, red chili powder"
9,Fresh Coriander Bunch (Dhaniya),Vegetables,"coriander, dhaniya, kothimbir, cilantro"
10,Fresh Mint Leaves Bunch (Pudina),Vegetables,"mint, pudhina, pudina patta"
11,Taj Mahal Red Label Tea 500g,Beverages,"tea, chai, chaha, red label"
12,Nescafe Classic Coffee 100g,Beverages,"coffee, koffee, nescafe"
13,Onion 1kg (Kanda),Vegetables,"onion, kanda, pyaz"
14,Tomato 1kg,Vegetables,"tomato, tamatar"
15,Coca-Cola Original Taste 750ml,Beverages,"coke, coca-cola, soft drink, cold drink"
16,Maggi 2-Minute Noodles Masala,Snacks,"maggi, noodles, instant food"
17,Amul Cheese Slices 100g,Dairy,"cheese, cheese slice, paneer slice"
18,Britannia Cheese Spread 180g,Dairy,"cheese, cheese spread, creamy cheese"
19,Fresh Lemon 4pcs (Nimbu),Vegetables,"lemon, nimbu, lime"
20,Saffola Gold Edible Oil 1L,Staples,"oil, tel, cooking oil, saffola"
21,Basmati Rice 1kg,Staples,"rice, chawal, basmati"
22,Kurkure Masala Munch,Snacks,"kurkure, snacks, chips"
"""

df = pd.read_csv(StringIO(csv_data))
print("Product Catalog successfully loaded.")
df.head()
```

Output:

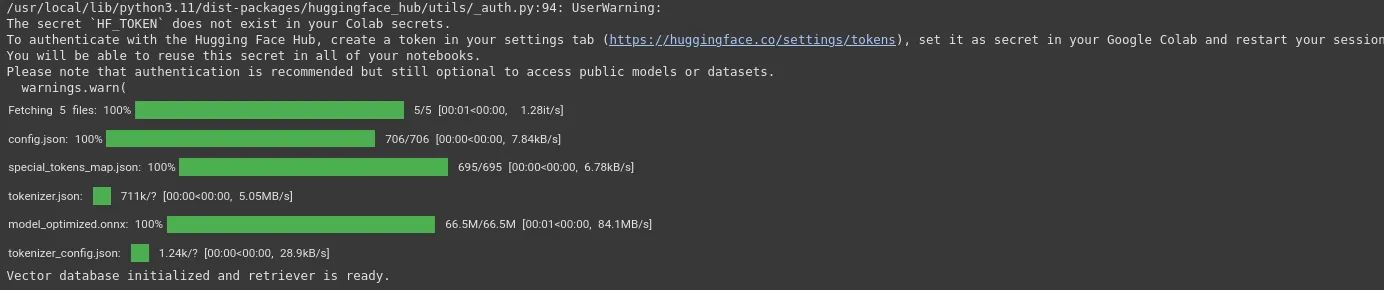

Step 3: Initialize a Vector Database

We will convert our product data into numerical representations (embeddings) that capture semantic meaning. We use FastEmbed for this, as it’s fast and runs locally, and store the embeddings in ChromaDB, a lightweight vector store.

Embedding Strategy: For each product, we create a single text document that combines its name, category, and tags. This creates a rich, descriptive embedding that improves the chances of a successful semantic match.

Embedding Model: We use the BAAI/bge-small-en-v1.5 model. The “small” variant is fast and resource-efficient, and it performs competitively on semantic-similarity and text-retrieval tasks. Note that it is an English model: in this demo, vernacular queries such as “kanda” or “dhaniya” match because those transliterated terms appear in each product’s tags, not because the model understands Hindi or Marathi. A production system handling regional languages should use a multilingual embedding model.

```python
import os
import json

from langchain_core.documents import Document
from langchain_community.embeddings import FastEmbedEmbeddings
from langchain_chroma import Chroma

# Create one LangChain Document per product: name + category + tags in a single text
documents = [
    Document(
        page_content=f"{row['product_name']}. Category: {row['category']}. Tags: {row['tags']}",
        metadata={
            # Cast numpy.int64 to a plain int so Chroma's metadata validation accepts it
            "product_id": int(row["product_id"]),
            "product_name": row["product_name"],
            "category": row["category"],
        },
    )
    for _, row in df.iterrows()
]

# Initialize the embedding model and the in-memory Chroma vector store
embedding_model = FastEmbedEmbeddings(model_name="BAAI/bge-small-en-v1.5")
vectorstore = Chroma.from_documents(documents, embedding_model)

# The retriever fetches the top-k (here k=5) most similar documents for a query
retriever = vectorstore.as_retriever(search_kwargs={"k": 5})
print("Vector database initialized and retriever is ready.")
```

Output:

If you see a download progress widget in this output, FastEmbed is downloading the BAAI/bge-small-en-v1.5 model files locally.

Step 4: Design the Advanced LLM Prompt

This is the most critical step. We design a prompt that instructs the LLM to act as an expert query interpreter. The prompt forces the LLM to follow a strict process and return a structured JSON object, which keeps the output predictable and easy to use in our application.

Key features of the prompt:

- Clear Role: The LLM is told it’s an expert system for a grocery store.

- Context is Key: It must base its decision on the list of retrieved products.

- Mandatory JSON Output: We instruct it to return a JSON object with a specific schema: corrected_query, identified_product, confidence, and reasoning. This is crucial for system reliability.

from langchain_groq import ChatGroq

from langchain_core.prompts import ChatPromptTemplate

# IMPORTANT: Set your Groq API key here or as an environment variable

os.environ["GROQ_API_KEY"] = "YOUR_API_KEY” # Replace with your key

llm = ChatGroq(

temperature=0,

model_name="llama3-8b-8192",

model_kwargs={"response_format": {"type": "json_object"}},

)

prompt_template = """

You are a world-class search query interpretation engine for a grocery delivery service like Zepto.

Your primary goal is to understand the user's *intent*, even if their query is misspelled, in a different language, or uses slang.

Analyze the user's `RAW QUERY` and the `CONTEXT` of semantically similar products retrieved from our catalog.

Based on this, determine the most likely product the user is searching for.

**INSTRUCTIONS:**

1. Compare the `RAW QUERY` against the product names in the `CONTEXT`.

2. Identify the single best match from the `CONTEXT`.

3. Generate a clean, corrected search query for that product.

4. Provide a confidence score (High, Medium, Low) and a brief reasoning for your choice.

5. Return a single JSON object with the following schema:

- "corrected_query": A clean, corrected search term.

- "identified_product": The full name of the single most likely product from the context.

- "confidence": Your confidence in the decision: "High", "Medium", or "Low".

- "reasoning": A brief, one-sentence explanation of why you made this choice.

If the query is too ambiguous or has no good match in the context, confidence should be "Low" and `identified_product` can be `null`.

---

CONTEXT:

{context}

RAW QUERY:

{query}

---

JSON OUTPUT:

"""

prompt = ChatPromptTemplate.from_template(prompt_template)

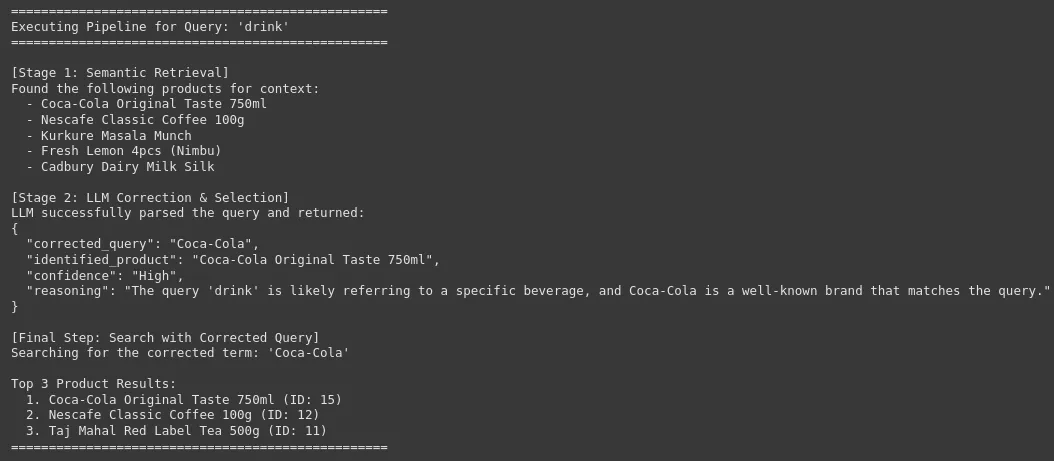

print("LLM and Prompt Template are configured.")Step 5: Creating the End-to-End Pipeline

We now chain all the components together using LangChain Expression Language (LCEL). This creates a seamless flow from query to final result.

Pipeline Flow:

- The user’s query is passed to the retriever to fetch context.

- The context and original query are formatted and fed into the prompt.

- The formatted prompt is sent to the LLM.

- The LLM’s JSON output is parsed into a Python dictionary.

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough


def format_docs(docs):
    """Formats the retrieved documents as a bulleted list of product names for the prompt."""
    return "\n".join(f"- {d.metadata['product_name']}" for d in docs)


# The main RAG chain: retrieve context -> fill prompt -> LLM -> raw JSON string
rag_chain = (
    {"context": retriever | format_docs, "query": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)


def search_pipeline(query: str):
    """Executes the full search and correction pipeline."""
    print(f"\n{'=' * 50}")
    print(f"Executing Pipeline for Query: '{query}'")
    print(f"{'=' * 50}")

    # --- Stage 1: Semantic Retrieval (shown for transparency; rag_chain retrieves again internally) ---
    initial_context = retriever.invoke(query)
    print("\n[Stage 1: Semantic Retrieval]")
    print("Found the following products for context:")
    for doc in initial_context:
        print(f"  - {doc.metadata['product_name']}")

    # --- Stage 2: LLM Correction & Selection ---
    print("\n[Stage 2: LLM Correction & Selection]")
    llm_output_str = rag_chain.invoke(query)
    try:
        llm_output = json.loads(llm_output_str)
        print("LLM successfully parsed the query and returned:")
        print(json.dumps(llm_output, indent=2))
        # Fall back to the raw query if the key is missing or null
        corrected_query = llm_output.get("corrected_query") or query
    except (json.JSONDecodeError, AttributeError) as e:
        print(f"LLM output failed to parse. Error: {e}")
        print(f"Raw LLM output: {llm_output_str}")
        corrected_query = query  # Fallback to original query

    # --- Final Step: Search with Corrected Query ---
    print("\n[Final Step: Search with Corrected Query]")
    print(f"Searching for the corrected term: '{corrected_query}'")
    final_results = vectorstore.similarity_search(corrected_query, k=3)
    print("\nTop 3 Product Results:")
    for i, doc in enumerate(final_results):
        print(f"  {i + 1}. {doc.metadata['product_name']} (ID: {doc.metadata['product_id']})")
    print(f"{'=' * 50}\n")


print("End-to-end search pipeline is ready.")
```

Step 6: Demonstration & Results

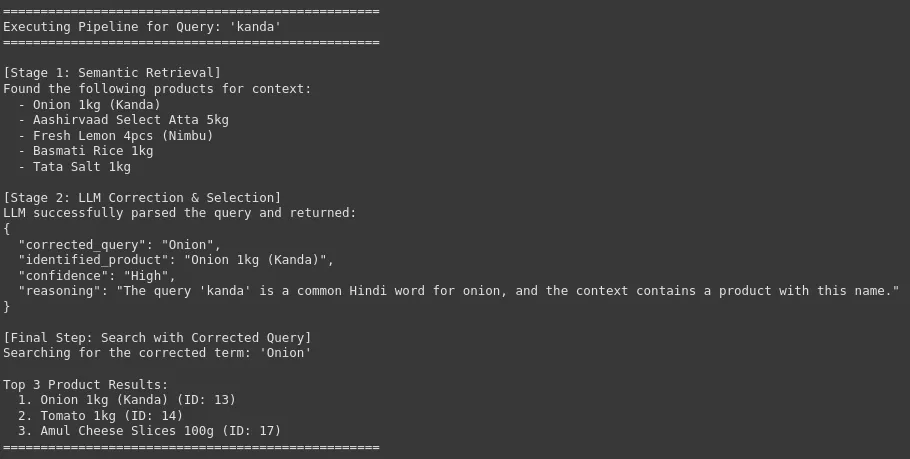

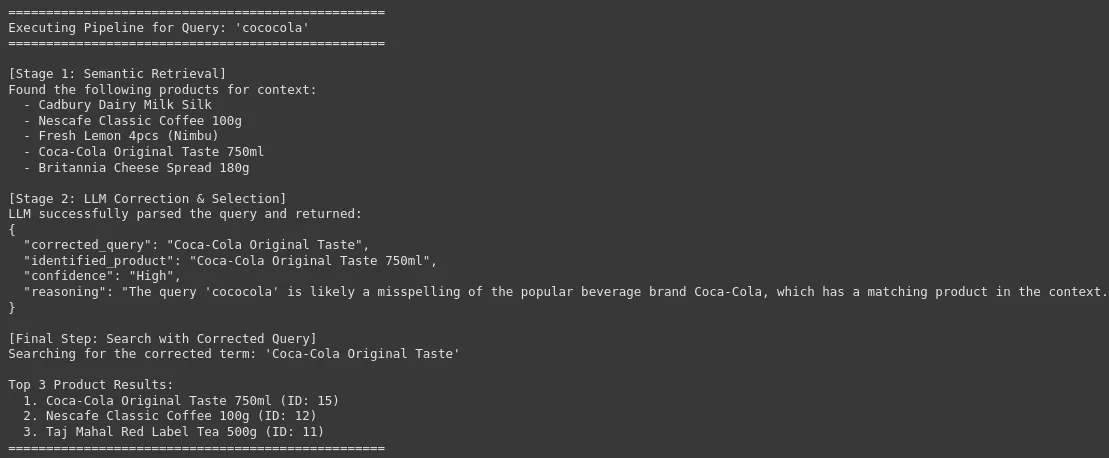

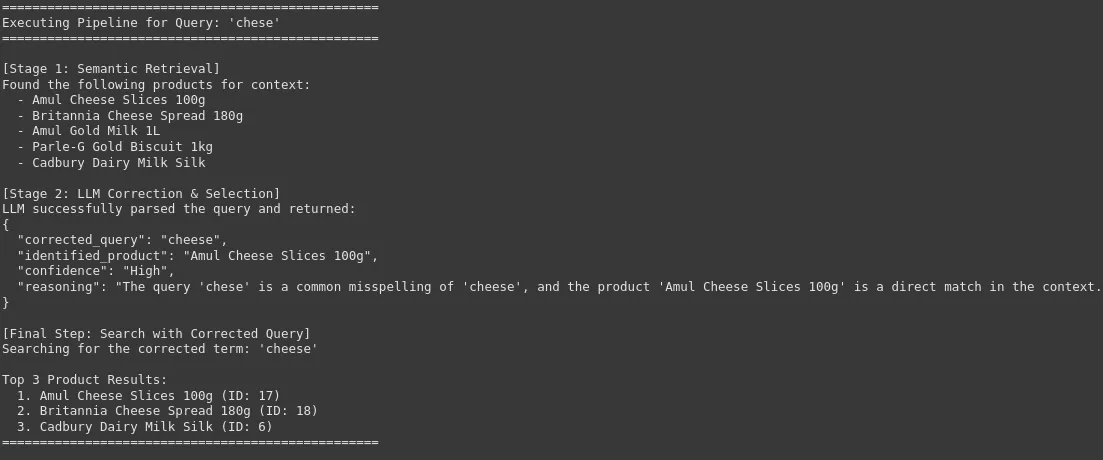

Now, let’s test the system with a variety of challenging queries to see how it performs.

# --- Test Case 1: Simple Misspelling ---

search_pipeline("aata")

# --- Test Case 2: Vernacular Term ---

search_pipeline("kanda")

# --- Test Case 3: Brand Name + Misspelling ---

search_pipeline("cococola")

# --- Test Case 4: Ambiguous Query ---

search_pipeline("chese")

# --- Test Case 5: Highly Ambiguous / Vague Query ---

search_pipeline("drink")Output:

The system maps raw, noisy user queries to the exact brand or product name, which is crucial for accurate product search on an e-commerce platform and, in turn, for user experience and conversion rates.
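The prompt asks the model to pick from the retrieved `CONTEXT`, but nothing in the pipeline enforces that. A cheap post-check, sketched below as a hypothetical helper `accept_correction`, keeps the correction only when `identified_product` is one of the retrieved names and the confidence is not “Low”; otherwise it searches with the raw query. This guard is an assumption of ours, not part of the original pipeline.

```python
from typing import List


def accept_correction(llm_output: dict, retrieved_names: List[str], raw_query: str) -> str:
    """Return the query to search with, guarding against off-catalog or low-confidence answers."""
    product = llm_output.get("identified_product")
    corrected = llm_output.get("corrected_query") or raw_query
    # Reject answers that name a product the retriever never returned (including null)
    if llm_output.get("confidence") == "Low" or product not in retrieved_names:
        return raw_query
    return corrected


retrieved = ["Coca-Cola Original Taste 750ml", "Nescafe Classic Coffee 100g"]
ok = {"corrected_query": "coca-cola", "identified_product": "Coca-Cola Original Taste 750ml", "confidence": "High"}
invented = {"corrected_query": "pepsi", "identified_product": "Pepsi 750ml", "confidence": "High"}
print(accept_correction(ok, retrieved, "cococola"))        # coca-cola
print(accept_correction(invented, retrieved, "cococola"))  # cococola
```

Inside `search_pipeline`, `retrieved_names` would be `[d.metadata["product_name"] for d in initial_context]`. Whether to discard every “Low” answer is a product decision: for a vague query like “drink”, the LLM’s suggestion may still be better than the raw text.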

You can find the full code inside this Git repository.

Conclusion

This multilingual query resolution system successfully replicates the core strategy of Zepto’s advanced search system. By combining fast semantic retrieval with intelligent LLM-based analysis, the system can:

- Correct misspellings and slang.

- Understand multilingual queries by matching them to the correct products.

- Disambiguate queries by using retrieved context to infer user intent (e.g., choosing between “cheese slices” and “cheese spread”).

- Provide structured, auditable outputs, showing not just the correction but also the reasoning behind it.

This RAG-based architecture offers a practical path to improving user experience and search conversion rates, as Zepto’s reported 7.5% conversion lift suggests.

Frequently Asked Questions

A. RAG improves LLM accuracy by anchoring the model to real catalog data, which avoids hallucination and keeps the prompt small.

A. Instead of bloating prompts, inject only the top relevant brand terms via the retrieval step.

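A. A multilingual embedding model optimized for semantic similarity (for example, BAAI/bge-m3) works best for noisy and vernacular inputs. The English-only BAAI/bge-small-en-v1.5 used in this guide works here mainly because the catalog tags include transliterated vernacular terms.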
